Using the DMF Service Node Appliance

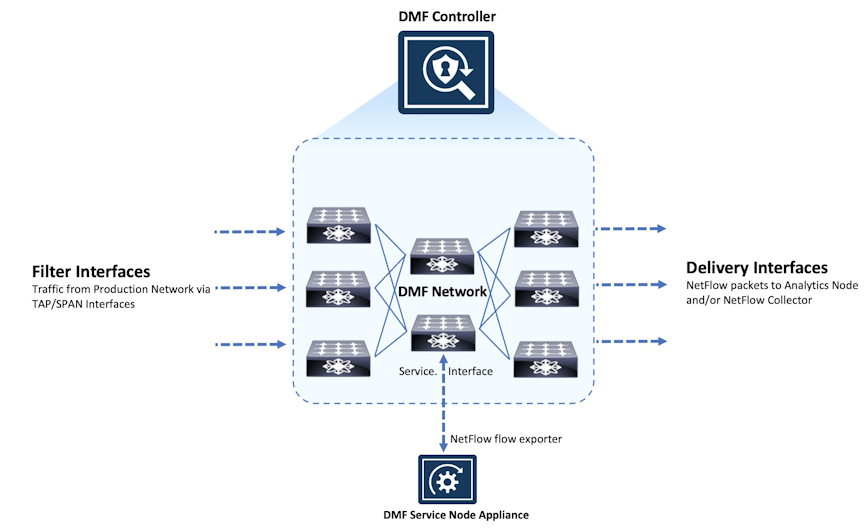

This chapter describes how to configure the managed services provided by the DANZ Monitoring Fabric (DMF) Service Node Appliance.

Overview

The DANZ Monitoring Fabric (DMF) Service Node has multiple interfaces connected to traffic for processing and analysis. Each interface can be programmed independently to perform any supported managed-service action.

To create a managed service, identify a switch interface connected to the service node, specify the service action, and configure the service action options.

Configure a DMF policy to use the managed service by name. This action causes the Controller to forward traffic the policy selects to the service node. The processed traffic is returned to the monitoring fabric using the same interface and sent to the tools (delivery interfaces) defined in the DMF policy.

If the traffic volume the policy selects is too much for a single service node interface, define a LAG on the switch connected to the service node, then use the LAG interface when defining the managed service. All service node interfaces connected to the LAG are configured to perform the same action. The traffic the policy selects is automatically load-balanced among the LAG member interfaces, and the return traffic is distributed in the same way.
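The hashing algorithm the switch uses to spread traffic across LAG members is not specified here. As a rough illustration only, the following Python sketch assumes a CRC32 hash over a 5-tuple flow key; the interface names and the `FiveTuple` and `pick_member` helpers are hypothetical. It shows why hash-based distribution keeps every packet of a given flow on the same member interface.

```python
import zlib
from typing import List, NamedTuple


class FiveTuple(NamedTuple):
    """Hypothetical flow key; the fields a real switch hashes on may differ."""
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def pick_member(flow: FiveTuple, members: List[str]) -> str:
    """Map a flow to one LAG member using CRC32 of the flow key (an assumption)."""
    key = "|".join(str(field) for field in flow).encode()
    return members[zlib.crc32(key) % len(members)]


# Hypothetical service node interfaces behind one LAG.
members = ["sni1", "sni2", "sni3", "sni4"]
flow = FiveTuple("10.0.0.1", "10.0.0.2", 6, 51512, 443)
chosen = pick_member(flow, members)
print(chosen in members)  # True
```

Because the member is a pure function of the flow key, packets of one flow are never reordered across members, while different flows spread across the LAG.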

Changing the Service Node Default Configuration

To enter config-service-node submode, enter the following command from config mode on the active DMF Controller:

controller-1(config)# service-node <service_node_alias>

controller-1(config-service-node)#

Replace service_node_alias with the alias to use for the service node. This alias is associated with the hardware MAC address of the service node using the mac command. The hardware MAC address configuration is mandatory for the service node to interact with the DMF Controller.

Use the following commands in config-service-node submode to override the default configuration for the associated service node:

- admin password: set the password to log in to the service node as an admin user.

- banner: set the service node pre-login banner message.

- description: set a brief description.

- logging: enable service node logging to the Controller.

- mac: configure a MAC address for the service node.

- ntp: configure the service node to override the default NTP parameters.

- snmp-server: configure an SNMP trap host to receive SNMP traps from the service node.

Using SNMP to Monitor DPDK Service Node Interfaces

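Use the following MIBs to monitor DPDK service node interfaces using SNMP:

- interfaces MIB: .1.3.6.1.2.1.2

- ifMIBObjects MIB: .1.3.6.1.2.1.31.1

For example, the following commands retrieve the 64-bit input and output octet counters (ifHCInOctets and ifHCOutOctets) for interface index 105:

snmpget -v2c -c public 10.106.6.5 .1.3.6.1.2.1.31.1.1.1.6.105

IF-MIB::ifHCInOctets.105 = Counter64: 10008

snmpget -v2c -c public 10.106.6.5 .1.3.6.1.2.1.31.1.1.1.10.105

IF-MIB::ifHCOutOctets.105 = Counter64: 42721

The following commands check the operational status (ifOperStatus) of interface indexes 109 and 105:

[root@TestTool anet]# snmpwalk -v2c -c onlonl 10.106.6.6 .1.3.6.1.2.1.2.2.1.8.109

IF-MIB::ifOperStatus.109 = INTEGER: down(2)

[root@TestTool anet]# snmpwalk -v2c -c onlonl 10.106.6.6 .1.3.6.1.2.1.2.2.1.8.105

IF-MIB::ifOperStatus.105 = INTEGER: up(1)

Configuring Managed Services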

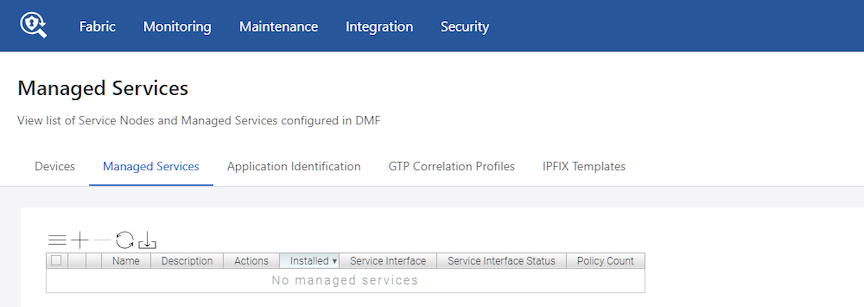

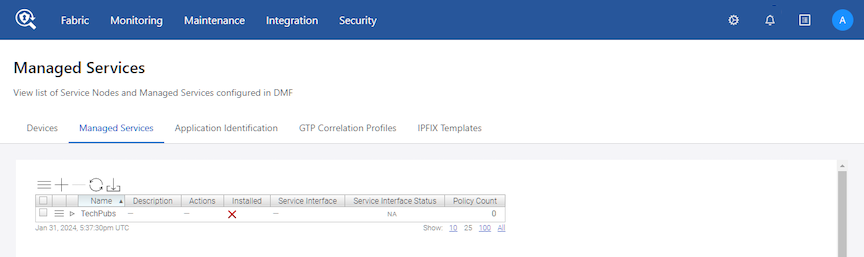

This page displays the service node appliance devices connected to the DMF Controller and the services configured on the Controller.

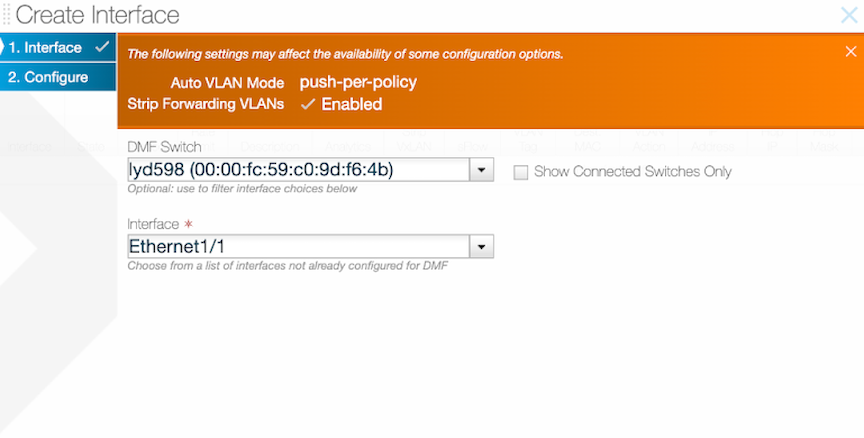

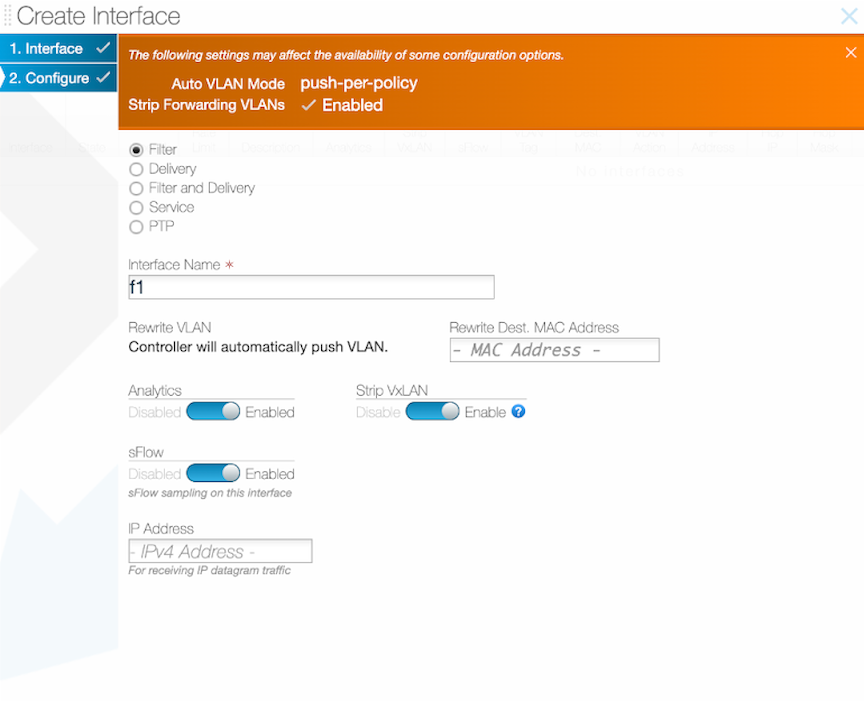

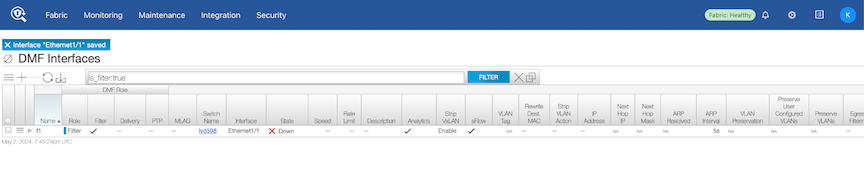

Using the GUI to Define a Managed Service

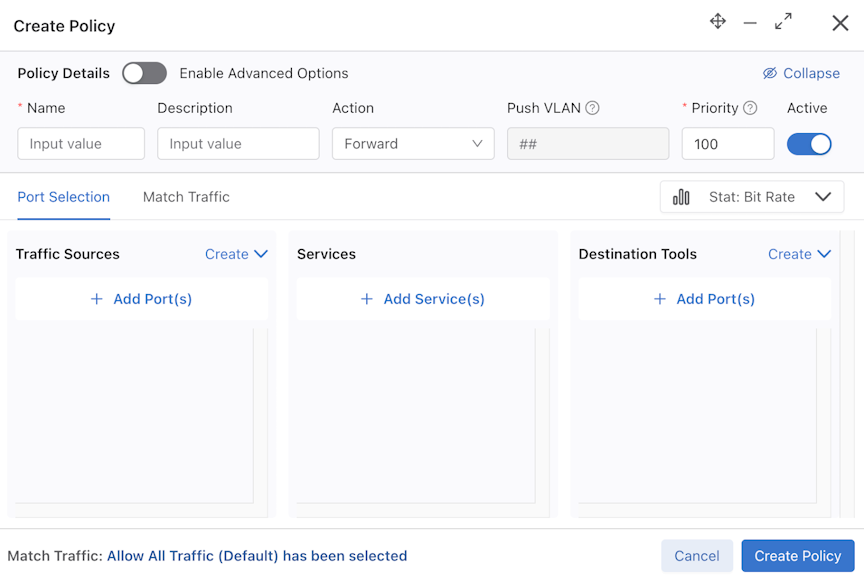

To create a new managed service, perform the following steps:

Using the CLI to Define a Managed Service

To configure a service to direct traffic to a DMF service node, complete the following steps:

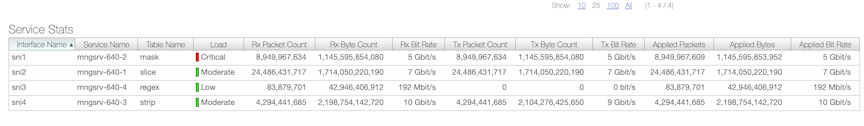

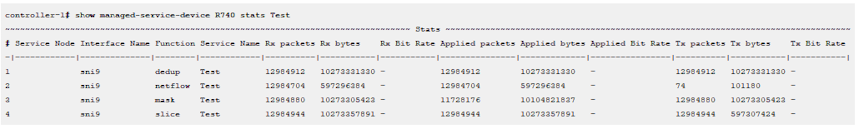

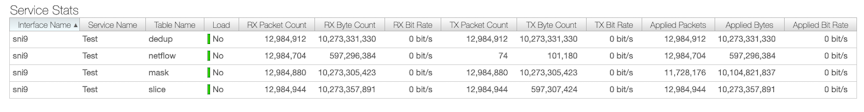

Monitoring Managed Services

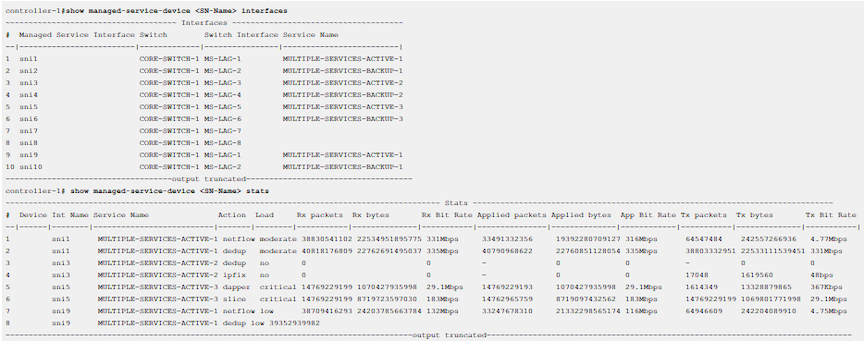

Use the following commands to view the interfaces and statistics of a service node:

controller-1# show managed-service-device <SN-Name> interfaces

controller-1# show managed-service-device <SN-Name> stats

For example, the following command shows the managed services handled by the Service Node Interface (SNI):

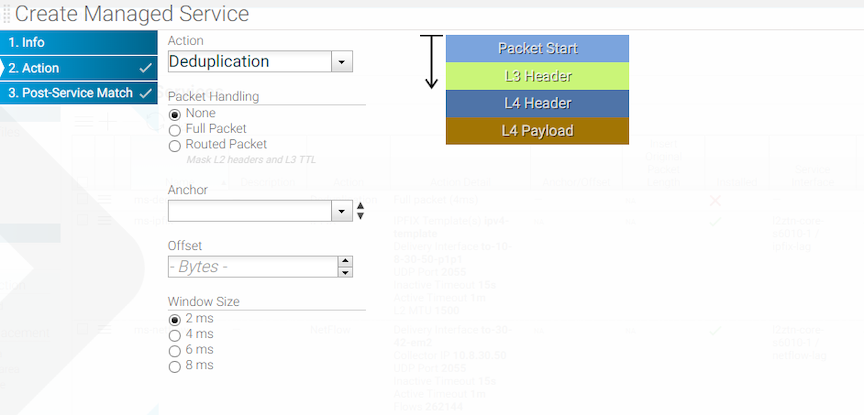

Deduplication Action

The DANZ Monitoring Fabric (DMF) Service Node enhances the efficiency of network monitoring tools by eliminating duplicate packets. Duplicate packets can be introduced into the out-of-band monitoring data stream by receiving the same flow from multiple TAP or SPAN ports spread across the production network. Deduplication eliminates these duplicate packets and enables more efficient use of passive monitoring tools.

The DMF Service Node supports the following deduplication types:

- Full packet deduplication: deduplicates incoming packets that are identical at the L2/L3/L4 layers.

- Routed packet deduplication: as packets traverse an IP network, the MAC address changes from hop to hop. Routed packet deduplication enables users to match packet contents starting from the L3 header.

- NATed packet deduplication: to perform NATed deduplication, the service node compares packets in the configured window that are identical starting from the L4 payload. To use NATed packet deduplication, configure the following fields as required:

- Anchor: Packet Start, L2 Header Start, L3 Header Start, or L3 Payload Start.

- Offset: the number of bytes from the anchor where the deduplication check begins.

The time window in which the service looks for duplicate packets is configurable. Select one of the following values: 2 ms (the default), 4 ms, 6 ms, or 8 ms.
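The following Python sketch illustrates time-window deduplication under simplifying assumptions: the comparison starts at a fixed byte offset (0 for full-packet, 14 for the L3 header of an untagged Ethernet frame) instead of a parsed anchor, and a SHA-1 digest of the compared bytes stands in for whatever comparison the service node actually performs. The `Deduplicator` class is hypothetical.

```python
import hashlib
from collections import OrderedDict

VALID_WINDOWS_MS = (2, 4, 6, 8)  # window choices listed above; 2 ms is the default


class Deduplicator:
    """Report a packet as a duplicate if identical bytes were seen within the window."""

    def __init__(self, start_offset: int = 0, window_ms: int = 2) -> None:
        if window_ms not in VALID_WINDOWS_MS:
            raise ValueError(f"window_ms must be one of {VALID_WINDOWS_MS}")
        self.start_offset = start_offset  # 0: full packet; 14: L3 header of an untagged frame
        self.window_ns = window_ms * 1_000_000
        self.seen = OrderedDict()  # digest -> time first seen (ns), oldest first

    def is_duplicate(self, packet: bytes, now_ns: int) -> bool:
        # Forget digests that have aged out of the window.
        while self.seen:
            oldest_digest, seen_ns = next(iter(self.seen.items()))
            if now_ns - seen_ns <= self.window_ns:
                break
            del self.seen[oldest_digest]
        digest = hashlib.sha1(packet[self.start_offset:]).digest()
        if digest in self.seen:
            return True
        self.seen[digest] = now_ns
        return False


# Routed-packet style: compare from the L3 header, so differing MAC addresses are ignored.
dedup = Deduplicator(start_offset=14, window_ms=2)
pkt_a = b"\xaa" * 12 + b"\x08\x00" + b"same L3 bytes"
pkt_b = b"\xbb" * 12 + b"\x08\x00" + b"same L3 bytes"
print(dedup.is_duplicate(pkt_a, 0))          # False: first copy
print(dedup.is_duplicate(pkt_b, 1_000_000))  # True: same L3 bytes 1 ms later
print(dedup.is_duplicate(pkt_a, 5_000_000))  # False: earlier copy aged out of the 2 ms window
```

A larger window catches duplicates that arrive further apart, at the cost of remembering more recent packets.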

GUI Configuration

CLI Configuration

Controller-1(config)# show running-config managed-service MS-DEDUP-FULL-PACKET

! managed-service

managed-service MS-DEDUP-FULL-PACKET

description 'This is a service that does Full Packet Deduplication'

1 dedup full-packet window 8

service-interface switch CORE-SWITCH-1 ethernet13/1

Controller-1(config)#

Controller-1(config)# show running-config managed-service MS-DEDUP-ROUTED-PACKET

! managed-service

managed-service MS-DEDUP-ROUTED-PACKET

description 'This is a service that does Routed Packet Deduplication'

1 dedup routed-packet window 8

service-interface switch CORE-SWITCH-1 ethernet13/2

Controller-1(config)#

Controller-1(config)# show running-config managed-service MS-DEDUP-NATTED-PACKET

! managed-service

managed-service MS-DEDUP-NATTED-PACKET

description 'This is a service that does Natted Packet Deduplication'

1 dedup anchor-offset l4-payload-start 0 window 8

service-interface switch CORE-SWITCH-1 ethernet13/3

Controller-1(config)#

To view deduplication statistics, use the following command:

show managed-service-device <Service-Node-Name> stats <dedup-service-name> dedup

For example:

Controller-1(config)# show managed-service-device DMF-SN-R740-1 stats MS-DEDUP dedup

~~~~~~~~~~~~~~~~ Stats ~~~~~~~~~~~~~~~~

Interface Name : sni16

Function : dedup

Service Name : MS-DEDUP

Rx packets : 9924950

Rx bytes : 4216466684

Rx Bit Rate : 1.40Gbps

Applied packets : 9923032

Applied bytes : 4216337540

Applied Bit Rate : 1.40Gbps

Tx packets : 9796381

Tx bytes : 4207106113

Tx Bit Rate : 1.39Gbps

Deduped frame count : 126651

Deduped percent : 1.2763336851075358

Load : low
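The counters in the sample output are internally consistent: the deduplicated frame count equals Applied packets minus Tx packets, and Deduped percent equals the deduplicated frames as a percentage of Applied packets. The following Python snippet checks this arithmetic on the sample values only; it does not claim how the service node computes these counters in general.

```python
# Counters copied from the sample output above.
applied_packets = 9923032
tx_packets = 9796381
deduped_frames = 126651

# In this sample, applied packets that were not transmitted were removed as duplicates.
print(applied_packets - tx_packets == deduped_frames)  # True

# Deduped percent in the sample equals deduped frames as a share of applied packets.
deduped_percent = deduped_frames / applied_packets * 100
print(round(deduped_percent, 4))  # 1.2763
```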

Header Strip Action

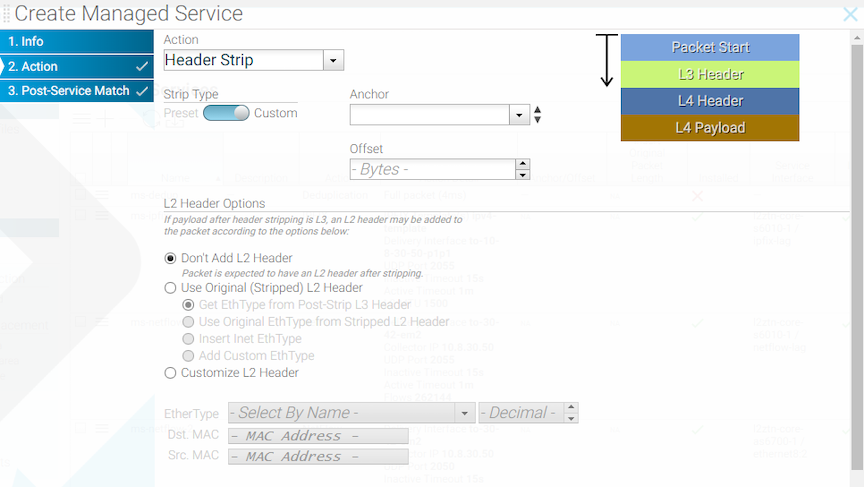

This action removes specific headers from the traffic selected by the associated DANZ Monitoring Fabric (DMF) policy. Alternatively, define custom header stripping based on the starting position of the Layer-3 header, the Layer-4 header, the Layer-4 payload, or the first byte in the packet.

Use one of the following actions to remove a specific header:

- decap-erspan: remove the Encapsulated Remote Switch Port Analyzer (ERSPAN) header.

- decap-cisco-fabric-path: remove the Cisco FabricPath protocol header.

- decap-l3-mpls: remove the Layer-3 Multi-protocol Label Switching (MPLS) header.

- decap-lisp: remove the LISP header.

- decap-vxlan [udp-port vxlan port]: remove the Virtual Extensible LAN (VXLAN) header.

- decap-geneve: remove the Geneve header.

For custom header stripping, the header-strip action uses one of the following anchors:

- l3-header-start

- l4-header-start

- l4-payload-start

- packet-start

Enter a positive integer representing the offset at which the strip action begins. If the offset is omitted, header stripping starts from the first byte in the packet.

GUI Configuration

After assigning the required actions to the header stripping service, click Next or Post-Service Match.

CLI Configuration

The header-strip service action strips the header and replaces it in one of the following ways:

- Add the original L2 src-mac and dst-mac.

- Add the original L2 src-mac, dst-mac, and ether-type.

- Specify and add a custom src-mac, dst-mac, and ether-type.
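To make the three replacement options concrete, the following Python sketch operates on raw frame bytes. It assumes an untagged Ethernet frame (a 14-byte L2 header), the packet-start anchor, and that a custom header is written on the wire as destination MAC, source MAC, then EtherType. The `header_strip` function and its mode names are hypothetical, not DMF CLI keywords.

```python
import struct
from typing import Optional, Tuple

L2_HEADER_LEN = 14  # dst MAC (6) + src MAC (6) + EtherType (2), untagged frame assumed


def mac(text: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' to 6 bytes."""
    return bytes.fromhex(text.replace(":", ""))


def header_strip(frame: bytes, offset: int, mode: str,
                 custom: Optional[Tuple[bytes, bytes, int]] = None) -> bytes:
    """Remove the first `offset` bytes, then prepend a replacement L2 header."""
    original_l2 = frame[:L2_HEADER_LEN]
    remainder = frame[offset:]
    if mode == "original-dstmac-srcmac":
        # Only the MACs are restored; the remainder is assumed to start with an EtherType.
        return original_l2[:12] + remainder
    if mode == "original-dstmac-srcmac-ethertype":
        return original_l2 + remainder
    if mode == "custom" and custom is not None:
        dst, src, ethertype = custom
        return dst + src + struct.pack("!H", ethertype) + remainder
    raise ValueError(f"unsupported mode: {mode}")


# A 20-byte outer header (L2 header plus 6 extra bytes) followed by the inner packet.
frame = mac("00:aa:00:00:00:01") + mac("00:bb:00:00:00:02") + b"\x08\x00" + bytes(6) + b"inner"
print(header_strip(frame, 20, "original-dstmac-srcmac-ethertype").hex())
custom = (mac("00:11:01:02:03:04"), mac("00:12:01:02:03:04"), 0x800)
print(header_strip(frame, 20, "custom", custom).hex())
```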

The following are examples of custom header stripping:

! managed-service

managed-service MS-HEADER-STRIP-1

1 header-strip packet-start 20 add-original-l2-dstmac-srcmac

service-interface switch CORE-SWITCH-1 ethernet13/1

! managed-service

managed-service MS-HEADER-STRIP-2

1 header-strip packet-start 20 add-original-l2-dstmac-srcmac-ethertype

service-interface switch CORE-SWITCH-1 ethernet13/2

! managed-service

managed-service MS-HEADER-STRIP-3

1 header-strip packet-start 20 add-custom-l2-header 00:11:01:02:03:04 00:12:01:02:03:04 0x800

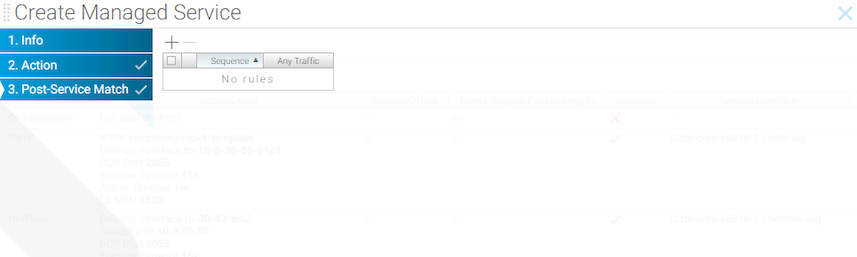

service-interface switch CORE-SWITCH-1 ethernet13/3

Configuring the Post-service Match

Configuring a post-service match on a managed service offers the following advantages:

- The fabric can remain in L3/L4 mode. It is not necessary to change to offset match mode.

- Easier configuration.

- All match conditions are available for the inner packet.

- The policy requires only one managed service to perform the strip service action.

! managed-service

managed-service MS-HEADER-STRIP-4

service-interface switch CORE-SWITCH-1 interface ethernet1

1 decap-l3-mpls

!

post-service-match

1 match ip src-ip 1.1.1.1

2 match tcp dst-ip 2.2.2.0 255.255.255.0

! policy

policy POLICY-1

filter-interface TAP-1

delivery-interface TOOL-1

use-managed-service MS-HEADER-STRIP-4 sequence 1

IPFIX and NetFlow Actions

IP Flow Information Export (IPFIX), also known as NetFlow v10, is an IETF standard defined in RFC 7011. The IPFIX generator (agent) gathers and transmits information about flows, which are sets of packets that contain all the keys specified by the IPFIX template. The generator observes the packets received in each flow and forwards the information to the IPFIX collector (server) in the form of a flowset.

Starting with the DANZ Monitoring Fabric (DMF) 7.1.0 release, both NetFlow v9 (Cisco proprietary) and IPFIX/NetFlow v10 are supported. Configuration of the IPFIX managed service is similar to that of earlier NetFlow versions except for the UDP port definition: NetFlow v5 collectors typically listen on UDP port 2055, while IPFIX collectors listen on UDP port 4739.

NetFlow records are typically exported using User Datagram Protocol (UDP) and collected using a flow collector. For a NetFlow service, the service node takes incoming traffic and generates NetFlow records. The service node drops the original packets, and the generated flow records, containing metadata about each flow, are forwarded out of the service node interface.

IPFIX Template

The IPFIX template consists of the key element IDs representing the IP flow, field element IDs representing the actions the exporter performs on IP flows matching the key element IDs, a template ID number for uniqueness, collector information, and eviction timers.

To define a template, configure keys of interest representing the IP flow and fields that identify the values measured by the exporter, the exporter information, and the eviction timers. To define the template, select the option from the DANZ Monitoring Fabric (DMF) GUI or enter the ipfix-template template-name command in config mode, replacing template-name with a unique identifier for the template instance.
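On the wire, RFC 7011 carries a template as a Template Set (Set ID 2) that lists a template ID and one (Information Element ID, length) pair per key or field. The following Python sketch encodes the keys and fields of the Perf-temp example template in this section (template ID 22222) as an IPFIX message. The mapping of DMF key and field names to IANA Information Elements, and the `template_set` and `ipfix_message` helpers, are assumptions for illustration; the service node's exporter may encode templates differently.

```python
import struct
import time

# (DMF name, IANA Information Element ID, length in bytes). The name-to-IE mapping is an assumption.
PERF_TEMP = [
    ("destination-ipv4-address", 12, 4),    # destinationIPv4Address
    ("destination-transport-port", 11, 2),  # destinationTransportPort
    ("dot1q-vlan-id", 243, 2),              # dot1qVlanId
    ("source-ipv4-address", 8, 4),          # sourceIPv4Address
    ("source-transport-port", 7, 2),        # sourceTransportPort
    ("flow-end-milliseconds", 153, 8),      # flowEndMilliseconds
    ("flow-end-reason", 136, 1),            # flowEndReason
    ("flow-start-milliseconds", 152, 8),    # flowStartMilliseconds
    ("maximum-ttl", 53, 1),                 # maximumTTL
    ("minimum-ttl", 52, 1),                 # minimumTTL
    ("packet-delta-count", 2, 8),           # packetDeltaCount
]


def template_set(template_id: int, elements) -> bytes:
    """Encode a Template Set (Set ID 2) containing one template record."""
    record = struct.pack("!HH", template_id, len(elements))
    for _name, ie_id, length in elements:
        record += struct.pack("!HH", ie_id, length)
    return struct.pack("!HH", 2, 4 + len(record)) + record


def ipfix_message(sets: bytes, sequence: int, domain_id: int) -> bytes:
    """Prepend the 16-byte message header: version 10, length, export time, sequence, domain."""
    header = struct.pack("!HHIII", 10, 16 + len(sets), int(time.time()), sequence, domain_id)
    return header + sets


msg = ipfix_message(template_set(22222, PERF_TEMP), sequence=0, domain_id=1)
print(len(msg))  # 68 = 16 (message header) + 4 (set header) + 4 (record header) + 11 * 4
```

The collector caches this template by ID and uses it to decode later data records, which is why each template needs a unique ID.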

IPFIX Keys

- destination-ipv4-address

- destination-ipv6-address

- destination-mac-address

- destination-transport-port

- dot1q-priority

- dot1q-vlan-id

- ethernet-type

- icmp-type-code-ipv4

- icmp-type-code-ipv6

- ip-class-of-service

- ip-diff-serv-code-point

- ip-protocol-identifier

- ip-ttl

- ip-version

- policy-vlan-id

- records-per-dmf-interface

- source-ipv4-address

- source-ipv6-address

- source-mac-address

- source-transport-port

- vlan id

- The Controller will not allow the key combination of source-mac-address and records-per-dmf-interface in push-per-policy mode.

- The Controller will not allow the key combinations of policy-vlan-id and records-per-dmf-interface in push-per-filter mode.
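The two restrictions above can be expressed as a simple pre-check, for example in a script that reviews templates before they are applied. The following Python sketch is hypothetical (the Controller performs its own validation); it rejects a key set that contains both members of the disallowed pair for the given mode.

```python
from typing import Set

# Key pairs the Controller rejects in each mode, per the restrictions above.
DISALLOWED_PAIRS = {
    "push-per-policy": {"source-mac-address", "records-per-dmf-interface"},
    "push-per-filter": {"policy-vlan-id", "records-per-dmf-interface"},
}


def check_keys(keys: Set[str], mode: str) -> None:
    """Raise ValueError if `keys` contains the disallowed pair for `mode`."""
    pair = DISALLOWED_PAIRS.get(mode)
    if pair is not None and pair <= keys:
        raise ValueError(f"{' and '.join(sorted(pair))} cannot be combined in {mode} mode")


check_keys({"source-mac-address", "records-per-dmf-interface"}, "push-per-filter")  # accepted
try:
    check_keys({"source-mac-address", "records-per-dmf-interface"}, "push-per-policy")
except ValueError as err:
    print(err)  # records-per-dmf-interface and source-mac-address cannot be combined in push-per-policy mode
```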

IPFIX Fields

A field defines each value updated for the packets the generator receives that match the specified keys. For example, include fields in the template to record the number of packets, the largest and smallest packet sizes, or the start and end times of the flows. To see a list of the fields supported in the current release of the DANZ Monitoring Fabric (DMF) Service Node, select the option from the DMF GUI, or type help in config-ipfix-template submode. The following fields are supported:

- flow-end-milliseconds

- flow-end-reason

- flow-end-seconds

- flow-start-milliseconds

- flow-start-seconds

- maximum-ip-total-length

- maximum-layer2-total-length

- maximum-ttl

- minimum-ip-total-length

- minimum-layer2-total-length

- minimum-ttl

- octet-delta-count

- packet-delta-count

- tcp-control-bits
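Conceptually, the keys decide which flow a packet belongs to, and the fields are accumulated per flow. The following Python sketch shows that aggregation for a few of the fields listed above, keyed by source and destination IPv4 address and port. The `FlowRecord` class and `update` function are hypothetical and ignore details such as counter widths and exactly which bytes are counted.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

FlowKey = Tuple[str, str, int, int]  # source/destination IPv4 address, source/destination port


@dataclass
class FlowRecord:
    packet_delta_count: int = 0
    octet_delta_count: int = 0
    minimum_ttl: int = 255
    maximum_ttl: int = 0
    flow_start_ms: int = 0
    flow_end_ms: int = 0


def update(table: Dict[FlowKey, FlowRecord], key: FlowKey,
           ttl: int, length: int, ts_ms: int) -> None:
    """Fold one packet into the record for its flow, creating the record if needed."""
    rec = table.get(key)
    if rec is None:
        rec = table[key] = FlowRecord(flow_start_ms=ts_ms)
    rec.packet_delta_count += 1
    rec.octet_delta_count += length
    rec.minimum_ttl = min(rec.minimum_ttl, ttl)
    rec.maximum_ttl = max(rec.maximum_ttl, ttl)
    rec.flow_end_ms = ts_ms


table: Dict[FlowKey, FlowRecord] = {}
web = ("10.0.0.1", "10.0.0.2", 51512, 80)
update(table, web, ttl=64, length=1500, ts_ms=1000)
update(table, web, ttl=62, length=60, ts_ms=1250)
update(table, ("10.0.0.3", "10.0.0.2", 40000, 53), ttl=60, length=80, ts_ms=1100)
print(len(table), table[web].packet_delta_count, table[web].minimum_ttl)  # 2 2 62
```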

Active and Inactive Timers

After the number of minutes specified by the active timer, the flow set is closed and forwarded to the IPFIX collector. The default active timer is one minute. If no packets matching the flow definition are received within the number of seconds set by the inactive timer, the flow set is closed and forwarded without waiting for the active timer to expire. The default inactive timer is 15 seconds.
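The interaction of the two timers can be sketched as a per-flow check that runs periodically. The following Python function is a hypothetical illustration using the default values above (a 60-second active timer and a 15-second inactive timer); it returns which timer, if any, closes the flow set at time `now_s`.

```python
from typing import Optional

ACTIVE_TIMEOUT_S = 60    # default active timer: one minute
INACTIVE_TIMEOUT_S = 15  # default inactive timer: 15 seconds


def expiry_reason(first_seen_s: float, last_seen_s: float, now_s: float,
                  active_s: float = ACTIVE_TIMEOUT_S,
                  inactive_s: float = INACTIVE_TIMEOUT_S) -> Optional[str]:
    """Return "inactive", "active", or None if the flow set stays open."""
    if now_s - last_seen_s >= inactive_s:
        return "inactive"  # no matching packets for the inactive interval
    if now_s - first_seen_s >= active_s:
        return "active"    # flow set has been open for the full active interval
    return None


print(expiry_reason(0, 10, 20))    # None: idle 10 s, open 20 s
print(expiry_reason(0, 10, 26))    # inactive: idle 16 s
print(expiry_reason(0, 59.5, 60))  # active: open 60 s, still receiving packets
```

Checking the inactive timer first lets idle flows be exported early, while long-lived busy flows are still reported at least once per active interval.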

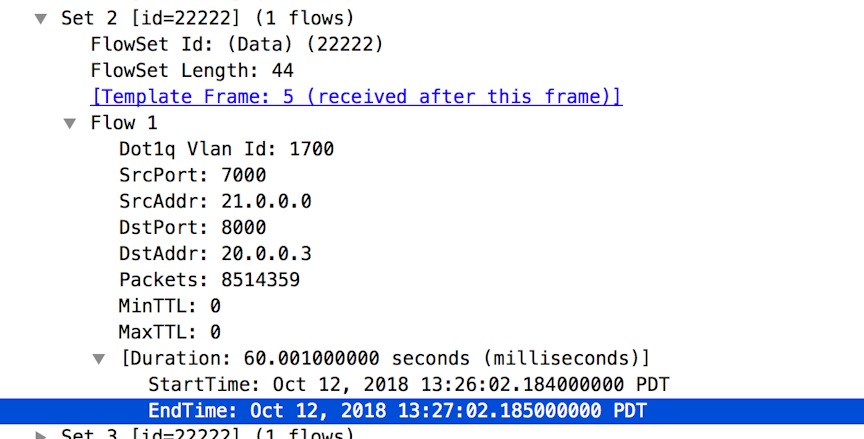

Example Flowset

The following is a running-config that shows the IPFIX template used to generate this flowset.

Example IPFIX Template

! ipfix-template

ipfix-template Perf-temp

template-id 22222

key destination-ipv4-address

key destination-transport-port

key dot1q-vlan-id

key source-ipv4-address

key source-transport-port

field flow-end-milliseconds

field flow-end-reason

field flow-start-milliseconds

field maximum-ttl

field minimum-ttl

field packet-delta-count

Using the GUI to Define an IPFIX Template

Using the CLI to Define an IPFIX Template

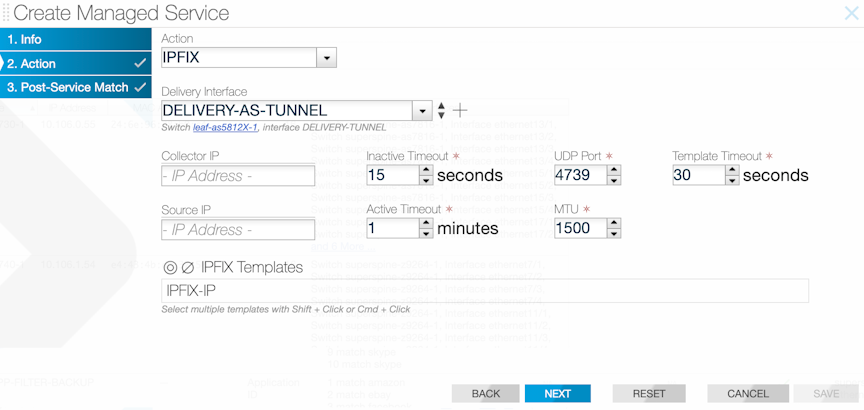

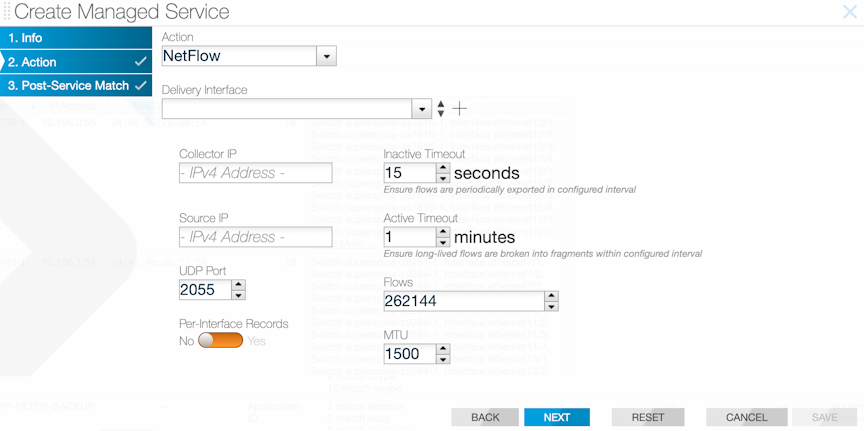

Using the GUI to Define an IPFIX Service Action

Select IPFIX from the Action selection list on the page.

- Assign a delivery interface.

- Configure the collector IP address.

- Identify the IPFIX template.

- Inactive timeout: the interval of inactivity that marks a flow inactive.

- Active timeout: the length of time between successive IPFIX flow records for a specific flow.

- Source IP: source address to use for the IPFIX flowsets.

- UDP port: UDP port to use for sending IPFIX flowsets.

- MTU: MTU to use for sending IPFIX flowsets.

After completing the configuration, click Next, and then click Save.

Using the CLI to Define an IPFIX Service Action

controller(config)# managed-service MS-IPFIX-SERVICE

controller(config-managed-srv)# 1 ipfix TO-DELIVERY-INTERFACE

controller(config-managed-srv-ipfix)# collector 10.106.1.60

controller(config-managed-srv-ipfix)# template IPFIX-TEMPLATE

The active-timeout and inactive-timeout commands are optional.

controller1# show running-config managed-service MS-IPFIX-ACTIVE

! managed-service

managed-service MS-IPFIX-ACTIVE

service-interface switch CORE-SWITCH-1 ethernet13/1

!

1 ipfix TO-DELIVERY-INTERFACE

collector 10.106.1.60

template IPFIX-TEMPLATE

config# show running-config ipfix-template

! ipfix-template

ipfix-template IPFIX-IP

template-id 1974

key destination-ipv4-address

key destination-ipv6-address

key ethernet-type

key source-ipv4-address

key source-ipv6-address

field flow-end-milliseconds

field flow-end-reason

field flow-start-milliseconds

field minimum-ttl

field tcp-control-bits

------------------------output truncated------------------------

Records Per Interface NetFlow Using DST-MAC Rewrite

Destination MAC rewrite is the default setting for the records-per-interface NetFlow and IPFIX feature. It applies to switches running Extensible Operating System (EOS) and SWL and is supported on all platforms.

A configuration option is available to rewrite the source MAC address instead when overwriting the destination MAC address is not preferred.

Configurations using the CLI

Global Configuration

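Use the filter-mac-rewrite command in config mode to select whether the source or destination MAC address is rewritten for records-per-interface. The following example illustrates using rewrite-src-mac or rewrite-dst-mac with the filter-mac-rewrite command.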

c1(config)# filter-mac-rewrite rewrite-src-mac

c1(config)# filter-mac-rewrite rewrite-dst-mac

NetFlow Configuration

c1(config)# managed-service ms1

c1(config-managed-srv)# 1 netflow

c1(config-managed-srv-netflow)# collector 213.1.1.20 udp-port 2055 mtu 1024 records-per-interface

IPFIX Configuration

c1(config)# ipfix-template i1

c1(config-ipfix-template)# field maximum-ttl

c1(config-ipfix-template)# key records-per-dmf-interface

c1(config-ipfix-template)# template-id 300

c1(config)# managed-service ms1

c1(config-managed-srv)# 1 ipfix

c1(config-managed-srv-ipfix)# template i1

Show Commands

NetFlow Show Commands

Use the show running-config managed-service command to view the NetFlow settings.

c1(config)# show running-config managed-service

! managed-service

managed-service ms1

!

1 netflow

collector 213.1.1.20 udp-port 2055 mtu 1024 records-per-interface

IPFIX Show Commands

Use the show ipfix-template i1 command to view the IPFIX settings.

c1(config)# show ipfix-template i1

~~~~~~~~~~~~~~~~~~ Ipfix-templates~~~~~~~~~~~~~~~~~~

# Template Name Keys Fields

-|-------------|-------------------------|-----------|

1 i1 records-per-dmf-interface maximum-ttl

c1(config)# show running-config managed-service

! managed-service

managed-service ms1

!

1 ipfix

template i1

Limitations

- The filter-mac-rewrite rewrite-src-mac command cannot be used on the filter interface that is part of a policy using timestamping replace-src-mac. However, the command works when using a timestamping add-header-after-l2 configuration.

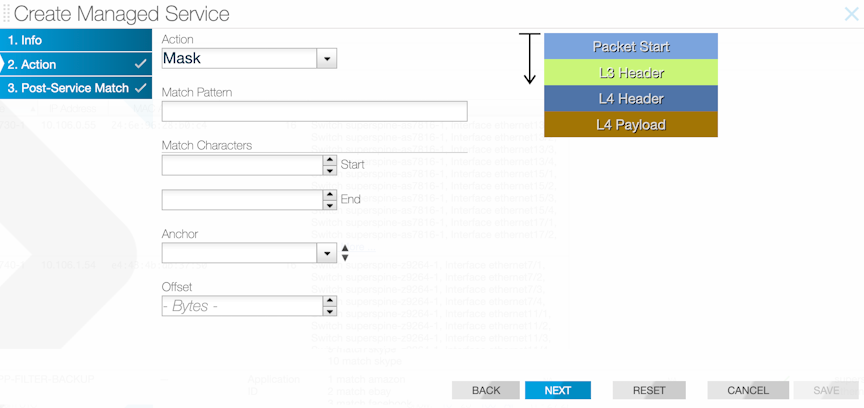

Packet-masking Action

The packet-masking action can hide specific characters in a packet, such as a password or credit card number, based on offsets from different anchors and by matching characters using regular expressions (regex).

The mask service action applies the specified mask to the matched packet region.
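The following Python sketch shows the idea behind regex-based masking using the email-address pattern from the MS-PACKET-MASK example in this section. It assumes each matched byte is replaced by one X so the packet length is unchanged; the `mask` function is hypothetical. Note that the unescaped `.` in the pattern matches any character, not only a literal dot.

```python
import re

# Pattern copied from the MS-PACKET-MASK example; "." here matches any character.
EMAIL = re.compile(rb"([a-zA-Z0-9._-]+@[a-zA-Z0-9._-]+.[a-zA-Z0-9_-]+)")


def mask(payload: bytes, pattern=EMAIL, fill: bytes = b"X") -> bytes:
    """Overwrite each match with the fill byte, keeping the payload length the same."""
    return pattern.sub(lambda m: fill * len(m.group(0)), payload)


masked = mask(b"contact: alice@example.com;")
print(masked)  # the 17-byte address is replaced by 17 X bytes
```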

GUI Configuration

CLI Configuration

Controller-1(config)# show running-config managed-service MS-PACKET-MASK

! managed-service

managed-service MS-PACKET-MASK

description "This service masks pattern matching an email address in payload with X"

1 mask ([a-zA-Z0-9._-]+@[a-zA-Z0-9._-]+.[a-zA-Z0-9_-]+)

service-interface switch CORE-SWITCH-1 ethernet13/1

Arista Analytics Node Capability

The Arista Analytics Node can handle NetFlow v5/v9 and IPFIX packets. All of this flow data is represented in the NetFlow index.

NetFlow records are exported using User Datagram Protocol (UDP) to one or more specified NetFlow collectors. Use the DMF Service Node to configure the NetFlow collector IP address and the destination UDP port. The default UDP port is 2055.

Configuring the Arista Analytics Node Using the GUI

From the Arista Analytics Node dashboard, apply filter rules to display specific flow information.

- Delivery interface: interface to use for delivering NetFlow records to collectors.

Note: The next-hop address must be resolved for the service to be active.

- Collector IP: identify the NetFlow collector IP address.

- Inactive timeout: use the inactive-timeout command to configure the interval of inactivity before NetFlow times out. The default is 15 seconds.

- Source IP: specify a source IP address to use as the source of the NetFlow packets.

- Active timeout: use active timeout to configure the period during which a NetFlow record can be generated continuously before it is automatically terminated. The default is one minute.

- UDP port: change the UDP port number used for the NetFlow packets. The default is 2055.

- Flows: specify the maximum number of NetFlow packets allowed. The allowed range is 32768 to 1048576. The default is 262144.

- Per-interface records: identify the filter interface where the NetFlow packets were originally received. This information can be used to identify the hop-by-hop path from the filter interface to the NetFlow collector.

- MTU: change the Maximum Transmission Unit (MTU) used for NetFlow packets.
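The defaults and limits listed above can be captured in a small validated settings object, for example when generating or reviewing configurations with a script. The following Python dataclass is hypothetical and covers only parameters with documented defaults or ranges; it is not a DMF API.

```python
from dataclasses import dataclass

FLOWS_MIN, FLOWS_MAX = 32768, 1048576  # allowed range for the Flows setting


@dataclass
class NetflowSettings:
    """Hypothetical container for NetFlow service parameters with documented defaults."""
    collector_ip: str
    udp_port: int = 2055          # default NetFlow UDP port
    inactive_timeout_s: int = 15  # default inactive timeout
    active_timeout_s: int = 60    # default active timeout: one minute
    flows: int = 262144           # default Flows value

    def __post_init__(self) -> None:
        if not FLOWS_MIN <= self.flows <= FLOWS_MAX:
            raise ValueError(f"flows must be between {FLOWS_MIN} and {FLOWS_MAX}")
        if not 0 < self.udp_port <= 65535:
            raise ValueError("udp_port must be between 1 and 65535")


settings = NetflowSettings(collector_ip="213.1.1.20")
print(settings.udp_port, settings.flows)  # 2055 262144
```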

Configuring the Arista Analytics Node Using the CLI

Enter the 1 netflow command and identify the configuration name. The submode changes to config-managed-srv-netflow, where you can view and configure a specific NetFlow configuration.

The DMF Service Node replicates NetFlow packets received without changing the source IP address. Packets that do not match the specified destination IP address and packets that are not IPv4 or UDP are passed through. To configure a NetFlow-managed service, perform the following steps:

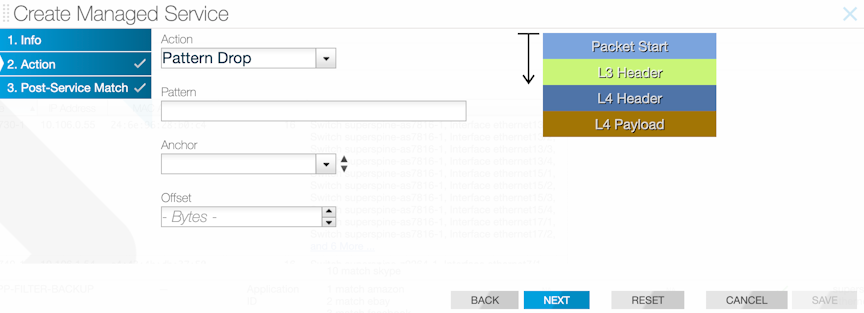

Pattern-drop Action

The pattern-drop service action drops matching traffic. Patterns that can be matched include the following:

- URLs and user agents in the HTTP header

- patterns in BitTorrent packets

- encapsulation headers for specific parameters, including GTP, VXLAN, and VN-Tag

- subscriber device IP (user-endpoint IP)

Pattern matching allows Session-aware Adaptive Packet Filtering (SAPF) to identify HTTPS transactions on non-standard SSL ports. It can filter custom applications and separate control traffic from user data traffic.

Pattern matching is also helpful in enforcing IT policies, such as identifying hosts using unsupported operating systems or dropping unsupported traffic. For example, the Windows OS version can be identified and filtered based on the user-agent field in the HTTP header. The user-agent field may appear at variable offsets, so a regular expression search is used to identify the specified value wherever it occurs in the packet.
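Because the User-Agent field can appear at different offsets, a fixed-offset comparison is not enough; the pattern has to be searched for anywhere in the packet. The following Python sketch illustrates pattern-drop semantics with a hypothetical pattern that targets Windows NT 5.0 and 5.1 user agents: packets containing a match are dropped and all others are forwarded. The `pattern_drop` helper is hypothetical.

```python
import re
from typing import Iterable, List

# Hypothetical pattern: a User-Agent header line that mentions Windows NT 5.0 or 5.1.
UA_PATTERN = re.compile(rb"User-Agent:[^\r\n]*Windows NT 5\.[01]")


def pattern_drop(packets: Iterable[bytes], pattern=UA_PATTERN) -> List[bytes]:
    """Drop packets that contain the pattern at any offset; forward the rest."""
    return [pkt for pkt in packets if pattern.search(pkt) is None]


short = b"GET / HTTP/1.1\r\nUser-Agent: Mozilla/4.0 (Windows NT 5.1)\r\n\r\n"
longer = (b"GET /a/much/longer/path HTTP/1.1\r\nHost: example.com\r\n"
          b"User-Agent: Mozilla/4.0 (Windows NT 5.0)\r\n\r\n")
modern = b"GET / HTTP/1.1\r\nUser-Agent: Mozilla/5.0 (Windows NT 10.0)\r\n\r\n"
print(pattern_drop([short, longer, modern]) == [modern])  # True
```

The User-Agent starts at a different byte position in `short` and `longer`, yet both are dropped because the search is not tied to an offset.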

GUI Configuration

CLI Configuration

Controller-1(config)# show running-config managed-service MS-PACKET-MASK

! managed-service

managed-service MS-PACKET-MASK

description "This service drops traffic that has an email address in its payload"

1 pattern-drop ([a-zA-Z0-9._-]+@[a-zA-Z0-9._-]+.[a-zA-Z0-9_-]+)

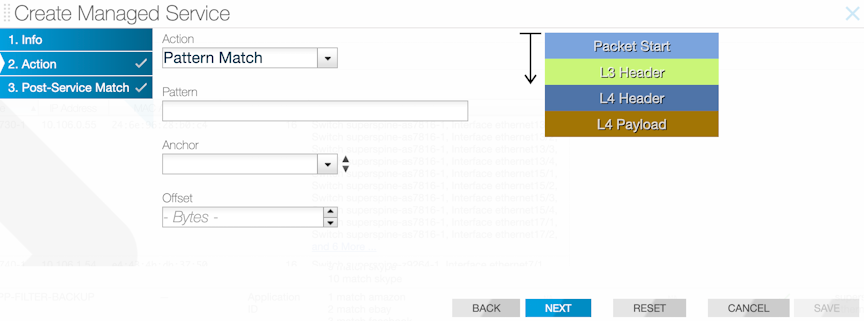

service-interface switch CORE-SWITCH-1 ethernet13/1

Pattern-match Action

The pattern-match service action matches and forwards matching traffic and is similar to the pattern-drop service action. Patterns that can be matched include the following:

- URLs and user agents in the HTTP header

- patterns in BitTorrent packets

- encapsulation headers for specific parameters, including GTP, VXLAN, and VN-Tag

- subscriber device IP (user-endpoint IP)


Pattern matching allows Session-aware Adaptive Packet Filtering (SAPF) to identify HTTPS transactions on non-standard SSL ports. It can filter custom applications and separate control traffic from user data traffic.

Pattern matching is also helpful in enforcing IT policies, such as identifying hosts using unsupported operating systems or dropping unsupported traffic. For example, the Windows OS version can be identified and filtered based on the user-agent field in the HTTP header. The user-agent field may appear at variable offsets, so a regular expression search is used to identify the specified value wherever it occurs in the packet.

GUI Configuration

CLI Configuration

Use the pattern-match pattern keyword to enable the pattern-matching service action. Specify the pattern that packets must match to be forwarded.

The following example matches Windows NT 5.0 or Windows NT 5.1 anywhere in the packet and delivers the matching packets to the delivery interface TOOL-PORT-TO-WIRESHARK-1. This service is optional and is applied to TCP traffic to destination port 80.

! managed-service

managed-service MS-PATTERN-MATCH

description 'regular expression filtering'

1 pattern-match 'Windows\\sNT\\s5\\.[0-1]'

service-interface switch CORE-SWITCH-1 ethernet13/1

! policy

policy PATTERN-MATCH

action forward

delivery-interface TOOL-PORT-TO-WIRESHARK-1

description 'match regular expression pattern'

filter-interface TAP-INTF-FROM-PRODUCTION

priority 100

use-managed-service MS-PATTERN-MATCH sequence 1 optional

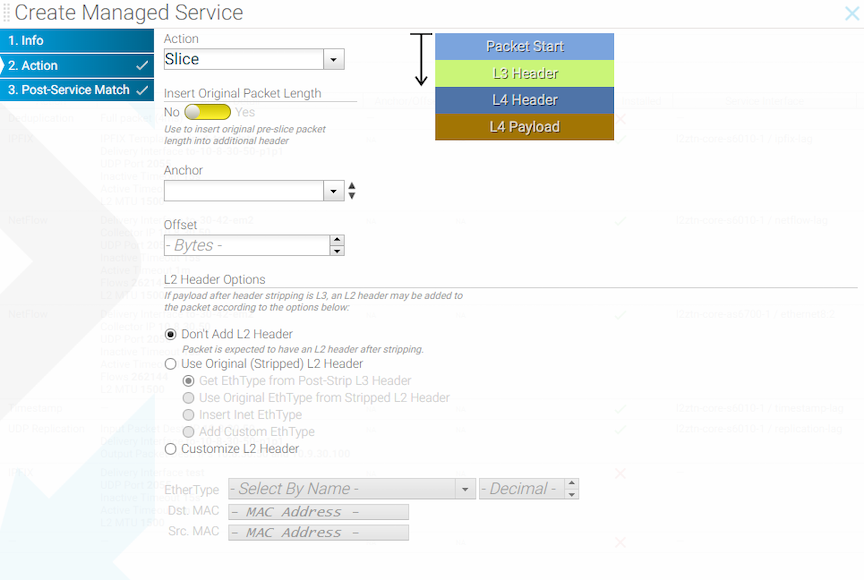

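1 match tcp dst-port 80

Slice Action

The slice action truncates each packet at a configured offset, measured from one of the following anchors: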

- Packet start

- L3 header start

- L4 header start

- L4 payload start
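The following Python sketch shows how the four anchors could be located in an untagged Ethernet/IPv4/TCP frame and how a slice keeps only the bytes up to the anchor plus the offset. The `anchors` and `slice_packet` helpers are hypothetical; they ignore VLAN tags, IPv6, and non-TCP traffic and do not model the insert-original-packet-length option.

```python
from typing import Dict


def anchors(frame: bytes) -> Dict[str, int]:
    """Byte positions of each anchor in an untagged Ethernet/IPv4/TCP frame."""
    l3 = 14                                   # Ethernet header without a VLAN tag
    l4 = l3 + (frame[l3] & 0x0F) * 4          # IPv4 IHL is counted in 32-bit words
    payload = l4 + (frame[l4 + 12] >> 4) * 4  # TCP data offset is counted in 32-bit words
    return {"packet-start": 0, "l3-header-start": l3,
            "l4-header-start": l4, "l4-payload-start": payload}


def slice_packet(frame: bytes, anchor: str, offset: int) -> bytes:
    """Keep the bytes up to anchor + offset and discard the rest."""
    return frame[:anchors(frame)[anchor] + offset]


eth = bytes(12) + b"\x08\x00"               # MAC addresses zeroed, EtherType IPv4
ipv4 = bytes([0x45]) + bytes(19)            # version 4, IHL 5 (20-byte header)
tcp = bytes(12) + bytes([0x50]) + bytes(7)  # data offset 5 (20-byte header)
frame = eth + ipv4 + tcp + b"hello world"
print(len(slice_packet(frame, "l4-payload-start", 1)))  # 55: headers plus one payload byte
print(len(slice_packet(frame, "l3-header-start", 20)))  # 34: Ethernet plus IPv4 header
```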

GUI Configuration

This page allows inserting an additional header containing the original packet length.

CLI Configuration

! managed-service

managed-service my-service-name

1 slice l3-header-start 20 insert-original-packet-length

service-interface switch DMF-CORE-SWITCH-1 ethernet20/1

! managed-service

managed-service MS-SLICE-1

description 'slicing service'

1 slice l4-payload-start 1

service-interface switch DMF-CORE-SWITCH-1 ethernet40/1

! policy

policy slicing-policy

action forward

delivery-interface TOOL-PORT-TO-WIRESHARK-1

description 'remove payload'

filter-interface TAP-INTF-FROM-PRODUCTION

priority 100

use-managed-service MS-SLICE-1 sequence 1 optional

1 match tcp dst-ip 10.2.19.119 255.255.255.255 src-port 80

Packet Slicing on the 7280 Switch

This feature removes unwanted or unneeded bytes from a packet at a configurable byte position (offset). This approach is beneficial when the data of interest is situated within the headers or early in the packet payload. This action reduces the volume of the monitoring stream, particularly in cases where payload data is not necessary.

Another use case for packet slicing (the slice action) is removing payload data from captured traffic to ensure compliance.

Within DANZ Monitoring Fabric (DMF), two types of slice-managed services (packet slicing services) now exist, distinguished by whether the service is installed on a service node or on an interface of a supported switch. This section covers only the slice-managed service configured on a switch. The managed service interface is the switch interface used to configure this service.

All 7280 switches compatible with DMF 8.4 and later support this feature. Use the show switch all property command to check which switches in the DMF fabric support it. The feature is supported if the Min Truncate Offset and Max Truncate Offset properties have non-zero values.

# show switch all property

# Switch Min Truncate Offset...Max Truncate Offset

-|------|-------------------| ... |---------------------------------

1 7280 100...9236

2 core1 ...

Using the CLI to Configure Packet Slicing - 7280 Switch

Configure a slice-managed service on a switch using the following steps.

Key points to consider while configuring the slice action on a supported switch:

- Only the packet-start anchor is supported.

- Ensure the offset is within the Min/Max truncate size bounds reported by the show switch all property command. If the configured value is outside the bounds, DMF uses the closest value in the range. For example, if a user configures an offset of 64 and the min truncate offset reported by the switch properties is 100, the offset used is 100. If the configured offset is 10,000 and the max truncate offset reported by the switch properties is 9236, the offset used is 9236.

- A configured offset for slice-managed service includes FCS when programmed on a switch interface, which means an offset of 100 will result in a packet size of 96 bytes (accounting for 4-byte FCS).

- Configuring an offset below 17 is not allowed.

- The same service interface cannot chain multiple managed services.

- The insert-original-packet-length option is not applicable for switch-based slice-managed service.
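The offset rules above can be checked with a short calculation. The following Python sketch reproduces the documented examples: a 64-byte offset is raised to a minimum of 100, a 10,000-byte offset is lowered to a maximum of 9236, and a 100-byte offset yields a 96-byte packet because the offset includes the 4-byte FCS. The function names are hypothetical; the bounds come from the show switch all property output.

```python
FCS_BYTES = 4          # the configured offset includes the 4-byte FCS
MIN_CONFIGURABLE = 17  # offsets below 17 are rejected


def effective_offset(configured: int, min_truncate: int, max_truncate: int) -> int:
    """Clamp the configured offset to the bounds from show switch all property."""
    if min_truncate == 0 or max_truncate == 0:
        raise ValueError("switch does not support packet slicing")
    if configured < MIN_CONFIGURABLE:
        raise ValueError("offsets below 17 are not allowed")
    return max(min_truncate, min(configured, max_truncate))


def packet_size_without_fcs(offset: int) -> int:
    """Visible packet size after slicing, since the offset counts the FCS."""
    return offset - FCS_BYTES


print(effective_offset(64, 100, 9236))      # 100
print(effective_offset(10_000, 100, 9236))  # 9236
print(packet_size_without_fcs(100))         # 96
```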

CLI Show Commands

Use the show policy <policy-name> command to see the runtime state of a policy using the slice-managed service. The command shows the service interface information and stats.

Controller# show policy packet-slicing-policy

Policy Name: packet-slicing-policy

Config Status: active - forward

Runtime Status : installed

Detailed Status: installed - installed to forward

Priority : 100

Overlap Priority : 0

# of switches with filter interfaces : 1

# of switches with delivery interfaces : 1

# of switches with service interfaces: 1

# of filter interfaces : 1

# of delivery interfaces : 1

# of core interfaces : 0

# of services: 1

# of pre service interfaces: 1

# of post service interfaces : 1

Push VLAN: 1

Post Match Filter Traffic: -

Total Delivery Rate: -

Total Pre Service Rate : -

Total Post Service Rate: -

Overlapping Policies : none

Component Policies : none

Runtime Service Names: packet-slicing-7280

Installed Time : 2023-08-09 19:00:40 UTC

Installed Duration : 1 hour, 17 minutes

~ Match Rules ~

# Rule

-|-----------|

1 1 match any

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Filter Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# DMF IF Switch IF Name State Dir Packets Bytes Pkt Rate Bit Rate Counter Reset Time

-|------|------|-----------|-----|---|-------|-----|--------|--------|------------------------------|

1 f1 7280 Ethernet2/1 up rx 0 0 0 - 2023-08-09 19:00:40.305000 UTC

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Delivery Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# DMF IF Switch IF Name State Dir Packets Bytes Pkt Rate Bit Rate Counter Reset Time

-|------|------|-----------|-----|---|-------|-----|--------|--------|------------------------------|

1 d1 7280 Ethernet3/1 up tx 0 0 0 - 2023-08-09 19:00:40.306000 UTC

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Service Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# Service name Role Switch IF Name State Dir Packets Bytes Pkt Rate Bit Rate Counter Reset Time

-|-------------------|----|------|------------|-----|---|-------|-----|--------|--------|------------------------------|

1 packet-slicing-7280 pre 7280 Ethernet10/1 up tx 0 0 0 - 2023-08-09 19:00:40.305000 UTC

2 packet-slicing-7280 post 7280 Ethernet10/1 up rx 0 0 0 - 2023-08-09 19:00:40.306000 UTC

~ Core Interface(s) ~

None.

~ Failed Path(s) ~

None.

Use the show managed-services command to view the status of all the managed services, including the packet-slicing managed service on a switch.

Controller# show managed-services

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Managed-services ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# Service Name Switch Switch Interface Installed Max Post-Service BW Max Pre-Service BW Total Post-Service BW Total Pre-Service BW

-|-------------------|------|----------------|---------|-------------------|------------------|---------------------|--------------------|

1 packet-slicing-7280 7280 Ethernet10/1 True 400Gbps 400Gbps 80bps 80bps

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Actions of Service Names ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# Service Name Sequence Service Action Slice Anchor Insert original packet length Slice Offset

-|-------------------|--------|--------------|------------|-----------------------------|------------|

1 packet-slicing-7280 1 slice packet-start False 101

Using the GUI to Configure Packet Slicing - 7280 Switch

Managed Service Configuration

The managed service interface used for slice action must be in MAC loopback mode.

Troubleshooting Packet Slicing

The show switch all property command provides upper and lower bounds of packet slicing action’s offset. If bounds are present, the feature is supported; otherwise, the switch does not support the packet slicing feature.

The show fabric errors managed-service-error command provides information when DANZ Monitoring Fabric (DMF) fails to install a configured packet slicing managed service on a switch. Installation can fail for the following reasons:

- The managed service interface is down.

- More than one action is configured on a managed service interface of the switch.

- The managed service interface on a switch is neither a physical interface nor a LAG port.

- A non-slice managed service is configured on a managed service interface of a switch.

- The switch does not support packet slicing managed service, and its interface is configured with slice action.

- Slice action configured on a switch interface is not using a packet-start anchor.

- The managed service interface is not in MAC loopback mode.

Use the following commands to troubleshoot packet-slicing issues.

Controller# show fabric errors managed-service-error

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Managed Service related error~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# Error Service Name

-|---------------------------------------------------------------------------------------------------------------------------------------------|-------------------|

1 Pre-service interface 7280-Ethernet10/1-to-managed-service on switch 7280 is inactive; Service interface Ethernet10/1 on switch 7280 is down packet-slicing-7280

2 Post-service interface 7280-Ethernet10/1-to-managed-service on switch 7280 is inactive; Service interface Ethernet10/1 on switch 7280 is down packet-slicing-7280

The show switch switch-name interface interface-name dmf-stats command provides Rx and Tx rate information for the managed service interface.

Controller# show switch 7280 interface Ethernet10/1 dmf-stats

# Switch DPID Name State Rx Rate Pkt Rate Peak Rate Peak Pkt Rate TX Rate Pkt Rate Peak Rate Peak Pkt Rate Pkt Drop Rate

-|-----------|------------|-----|-------|--------|---------|-------------|-------|--------|---------|-------------|-------------|

1 7280 Ethernet10/1 down - 0 128bps 0 - 0 128bps 0 0

The show switch switch-name interface interface-name stats command provides Rx and Tx counter information for the managed service interface.

Controller# show switch 7280 interface Ethernet10/1 stats

# Name Rx Pkts Rx Bytes Rx Drop Tx Pkts Tx Bytes Tx Drop

-|------------|-------|--------|-------|-------|--------|-------|

1 Ethernet10/1 22843477 0 5140845937 0

Considerations

- Managed service action chaining is not supported when using a switch interface as a managed service interface.

- When configured for a supported switch, the managed service interface for slice action can only be a physical interface or a LAG.

- When using the packet slicing managed service, packets ingressing on the managed service interface are not counted in the ingress interface counters, which affects the output of the show switch switch-name interface interface-name stats and show switch switch-name interface interface-name dmf-stats commands. This issue does not affect byte counters; all byte counters show the original packet size, not the truncated size.

VXLAN Stripping on the 7280R3 Switch

Virtual Extensible LAN Header Stripping

Virtual Extensible LAN (VXLAN) Header Stripping supports the delivery of decapsulated packets to tools and devices in a DANZ Monitoring Fabric (DMF) fabric. This feature removes the VXLAN header, whether it was added by a tunnel used to reach the TAP Aggregation switch or was already present in the tapped traffic. Within the fabric, DMF supports installing the strip VXLAN service on a filter interface or a filter-and-delivery interface of a supported switch.

Platform Compatibility

For DMF deployments, the target platform is DCS-7280R3.

Use the show switch all property command to verify which switches in the DMF fabric support this feature. On a supported switch, the Strip Header Supported property has the value BSN_STRIP_HEADER_CAPS_VXLAN.

# show switch all property

#: 1

Switch : lyd599

Max Phys Port: 1000000

Min Lag Port : 1000001

Max Lag Port : 1000256

Min Tunnel Port: 15000001

Max Tunnel Port: 15001024

Max Lag Comps: 64

Tunnel Supported : BSN_TUNNEL_L2GRE

UDF Supported: BSN_UDF_6X2_BYTES

Enhanced Hash Supported: BSN_ENHANCED_HASH_L2GRE,BSN_ENHANCED_HASH_L3,BSN_ENHANCED_HASH_L2,BSN_ENHANCED_HASH_MPLS,BSN_ENHANCED_HASH_SYMMETRIC

Strip Header Supported : BSN_STRIP_HEADER_CAPS_VXLAN

Min Rate Limit : 1Mbps

Max Multicast Replication Groups : 0

Max Multicast Replication Entries: 0

PTP Timestamp Supported Capabilities : ptp-timestamp-cap-replace-smac, ptp-timestamp-cap-header-64bit, ptp-timestamp-cap-header-48bit, ptp-timestamp-cap-flow-based, ptp-timestamp-cap-add-header-after-l2

Min Truncate Offset: 100

Max Truncate Offset: 9236

Using the CLI to Configure VXLAN Header Stripping

Configuration

Use the following steps to configure strip-vxlan on a switch:

- (Optional) Set the strip-vxlan-udp-port field in the switch configuration. The default UDP port for strip-vxlan is 4789.

- Enable or disable strip-vxlan on a filter or both-filter-and-delivery interface using the role both-filter-and-delivery interface-name filter-interface strip-vxlan command.

> enable

# config

(config)# switch switch-name

(config-switch)# strip-vxlan-udp-port udp-port-number

(config-switch)# interface interface-name

(config-switch-if)# role both-filter-and-delivery interface-name filter-interface strip-vxlan

(config-switch-if)# role both-filter-and-delivery interface-name filter-interface no-strip-vxlan

(config)# show running-config

- After enabling a filter interface with strip-vxlan, any policy can use it. From the config-policy submode, add the filter-interface to the policy:

(config)# policy p1

(config-policy)# filter-interface filter-interface
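To make the stripping step concrete, the following minimal Python sketch removes the outer Ethernet, IPv4, UDP, and VXLAN headers from a frame whose UDP destination port matches the configured strip-vxlan-udp-port (default 4789). It assumes an untagged outer Ethernet header and an IPv4 outer header, uses the VXLAN header layout from RFC 7348, and is illustrative only; it is not how the switch pipeline implements the feature, and the function name is hypothetical.

```python
import struct
from typing import Optional

DEFAULT_VXLAN_UDP_PORT = 4789  # default strip-vxlan-udp-port
ETH_HDR_LEN = 14               # untagged outer Ethernet header (assumption)
ETHERTYPE_IPV4 = 0x0800
IPPROTO_UDP = 17
UDP_HDR_LEN = 8
VXLAN_HDR_LEN = 8
VXLAN_FLAG_I = 0x08            # "valid VNI" flag (RFC 7348)


def strip_vxlan(frame: bytes, udp_port: int = DEFAULT_VXLAN_UDP_PORT) -> Optional[bytes]:
    """Return the inner Ethernet frame of an IPv4/UDP/VXLAN packet, else None.

    None means the packet is not VXLAN on `udp_port` and would pass unchanged.
    """
    if len(frame) < ETH_HDR_LEN + 20:
        return None
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype != ETHERTYPE_IPV4:
        return None
    ihl = (frame[ETH_HDR_LEN] & 0x0F) * 4  # IPv4 header length in bytes
    if ihl < 20 or frame[ETH_HDR_LEN + 9] != IPPROTO_UDP:
        return None
    udp_off = ETH_HDR_LEN + ihl
    vxlan_off = udp_off + UDP_HDR_LEN
    inner_off = vxlan_off + VXLAN_HDR_LEN
    if len(frame) < inner_off:
        return None
    (dst_port,) = struct.unpack_from("!H", frame, udp_off + 2)
    if dst_port != udp_port or not (frame[vxlan_off] & VXLAN_FLAG_I):
        return None
    return frame[inner_off:]  # everything after the 8-byte VXLAN header
```

Passing a non-default port through udp_port mirrors the effect of changing strip-vxlan-udp-port: packets on the default port are then left untouched.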

Show Commands

Use the show policy policy-name command to view the runtime state of a policy that uses a filter interface with strip-vxlan configured. The output also shows service interface information and statistics.

# show policy strip-vxlan

Policy Name: strip-vxlan

Config Status: active - forward

Runtime Status : installed

Detailed Status: installed - installed to forward

Priority : 100

Overlap Priority : 0

# of switches with filter interfaces : 1

# of switches with delivery interfaces : 1

# of switches with service interfaces: 0

# of filter interfaces : 1

# of delivery interfaces : 1

# of core interfaces : 0

# of services: 0

# of pre service interfaces: 0

# of post service interfaces : 0

Push VLAN: 1

Post Match Filter Traffic: -

Total Delivery Rate: -

Total Pre Service Rate : -

Total Post Service Rate: -

Overlapping Policies : none

Component Policies : none

Installed Time : 2024-05-02 19:54:27 UTC

Installed Duration : 1 minute, 18 secs

Timestamping enabled : False

~ Match Rules ~

# Rule

-|-----------|

1 1 match any

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Filter Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# DMF IF Switch IF Name State Dir Packets Bytes Pkt Rate Bit Rate Counter Reset Time

-|------|------|-----------|-----|---|-------|-----|--------|--------|------------------------------|

1 f1 lyd598 Ethernet1/1 up rx 0 0 0 - 2024-05-02 19:54:27.141000 UTC

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Delivery Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# DMF IF Switch IF Name State Dir Packets Bytes Pkt Rate Bit Rate Counter Reset Time

-|------|------|-----------|-----|---|-------|-----|--------|--------|------------------------------|

1 d1 lyd598 Ethernet2/1 up tx 0 0 0 - 2024-05-02 19:54:27.141000 UTC

~ Service Interface(s) ~

None.

~ Core Interface(s) ~

None.

~ Failed Path(s) ~

None.

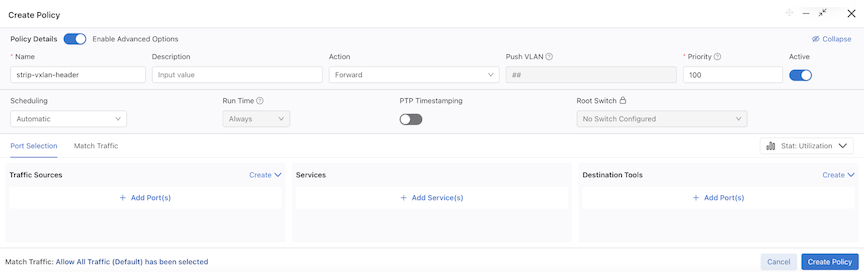

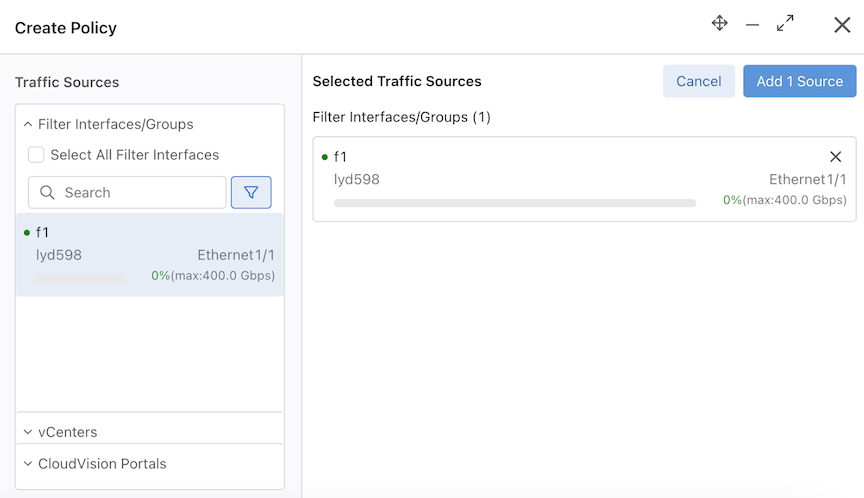

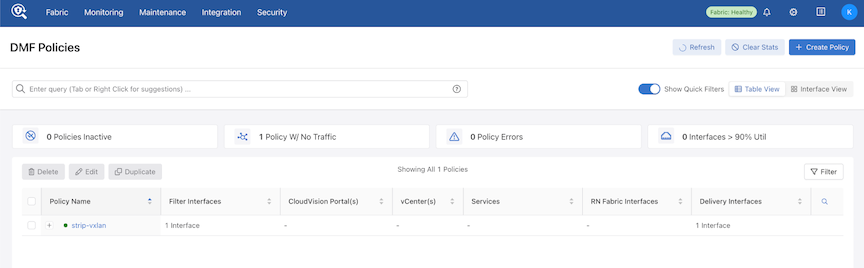

Using the GUI to Configure VXLAN Header Stripping

Filter Interface Configuration

To configure or edit a filter interface, proceed to Interfaces from the Monitoring menu and select .

Configure a filter interface on a switch that supports strip-vxlan.

Enable or Disable Strip VXLAN.

Policy Configuration

Create a new policy using DMF Policies and add the filter interface with strip VXLAN enabled.

Select Add port(s) under the Traffic Sources option and add the Filter Interface.

Add another delivery interface and create the policy.

Syslog Messages

There are no syslog messages associated with this feature.

Troubleshooting

The show switch all property command provides the Strip Header Supported property of the switch. If the value BSN_STRIP_HEADER_CAPS_VXLAN is present, the feature is supported; otherwise, the switch does not support this feature.

The show fabric warnings feature-unsupported-on-device command provides information when DMF fails to enable strip-vxlan on an unsupported switch.

The show switch switch-name table strip-vxlan-header command provides the gentable details.

The following are examples of several failure cases:

- The filter interface is down.

- The interface with strip-vxlan is neither a filter interface nor a filter-and-delivery interface.

- The switch does not support strip-vxlan.

- Tunneling / UDF is enabled simultaneously with strip-vxlan.

- An unsupported pipeline mode is in use with strip-vxlan enabled (strip-vxlan requires the strip-vxlan-match-push-vlan pipeline mode).

Limitations

- When configured for a supported switch, the filter interface for the decap-vxlan action can only be a physical interface or a LAG.

- It is not possible to enable strip-vxlan simultaneously with tunneling / UDF.

- When strip-vxlan is enabled on one or more interfaces of a switch, other filter interfaces on the same switch cannot match on the VXLAN header.

Session Slicing for TCP and UDP Sessions

Session-slice keeps track of TCP and UDP sessions (distinguished by source and destination IP address and port) and counts the number of packets sent in each direction (client-to-server and vice versa). After recognizing the session, the action transmits a user-configured number of packets to the tool node.

For TCP sessions, session-slice counts the packets sent in each direction after the TCP handshake is established. Slicing begins once the packet count has reached the configured threshold in both directions.

For UDP packets, slicing begins after reaching the configured threshold in either direction.

By default, session-slice will operate on both TCP and UDP sessions but is configurable to operate on only one or the other.
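The threshold rules above can be modeled with a small per-session counter. This is a hypothetical sketch, not Service Node code: it assumes the caller supplies each packet's session key and direction, that TCP packets are passed in only after the handshake completes, and that slice_after plays the role of the configured packet threshold. The method returns True once slicing has begun for that packet's session.

```python
from typing import Dict, Tuple

# (client_ip, client_port, server_ip, server_port, protocol)
SessionKey = Tuple[str, int, str, int, str]


class SessionSliceModel:
    """Hypothetical model of session-slice threshold tracking."""

    def __init__(self, slice_after: int) -> None:
        self.slice_after = slice_after
        # Per-session packet counts, keyed by direction: "c2s" or "s2c".
        self.counts: Dict[SessionKey, Dict[str, int]] = {}

    def is_sliced(self, key: SessionKey, direction: str) -> bool:
        """Count one packet and report whether slicing was already in effect for it."""
        state = self.counts.setdefault(key, {"c2s": 0, "s2c": 0})
        c2s_done = state["c2s"] >= self.slice_after
        s2c_done = state["s2c"] >= self.slice_after
        if key[4] == "tcp":
            sliced = c2s_done and s2c_done  # TCP: threshold reached in both directions
        else:
            sliced = c2s_done or s2c_done   # UDP: threshold reached in either direction
        state[direction] += 1
        return sliced
```

With slice_after=2, a UDP session is sliced from its third client-to-server packet, whereas a TCP session is not sliced until both directions have sent two packets.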

Refer to the DANZ Monitoring Fabric (DMF) Verified Scale Guide for session-slicing performance numbers.

Configure session-slice in managed services through the Controller as a Service Node action.

Using the CLI to Configure Session Slicing


Configuration Steps

The following show commands provide helpful information.

The show running-config managed-service managed-service-name command helps verify whether the session-slice configuration is complete.

dmf-controller-1(config)# show running-config managed-service managed_service_1

! managed-service

managed-service managed_service_1

!

1 session-slice

slice-after 1000

idle-timeout 60000

The show managed-services managed-service-name command provides status information about the service.

dmf-controller-1(config)# show managed-services managed_service_1

# Service Name Switch Switch Interface Installed Max Post-Service BW Max Pre-Service BW Total Post-Service BW Total Pre-Service BW

-|-----------------|---------------|----------------|---------|-------------------|------------------|---------------------|--------------------|

1 managed_service_1 DCS-7050CX3-32S ethernet2/4 True 25Gbps 25Gbps 624Kbps 432Mbps

Using the GUI to Configure Session Slicing

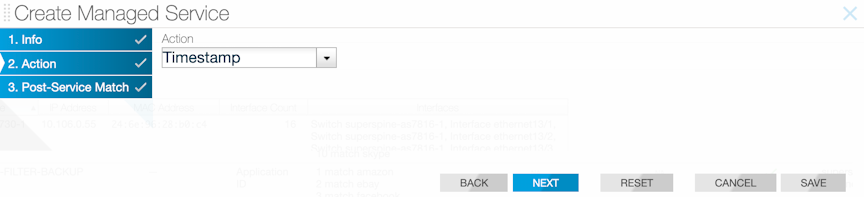

Timestamp Action

For matching traffic, the timestamp service action stamps every packet it receives with the time the service node received the packet.

GUI Configuration

CLI Configuration

! managed-service

managed-service MS-TIMESTAMP-1

1 timestamp

service-interface switch CORE-SWITCH-1 ethernet15/3

UDP-replication Action

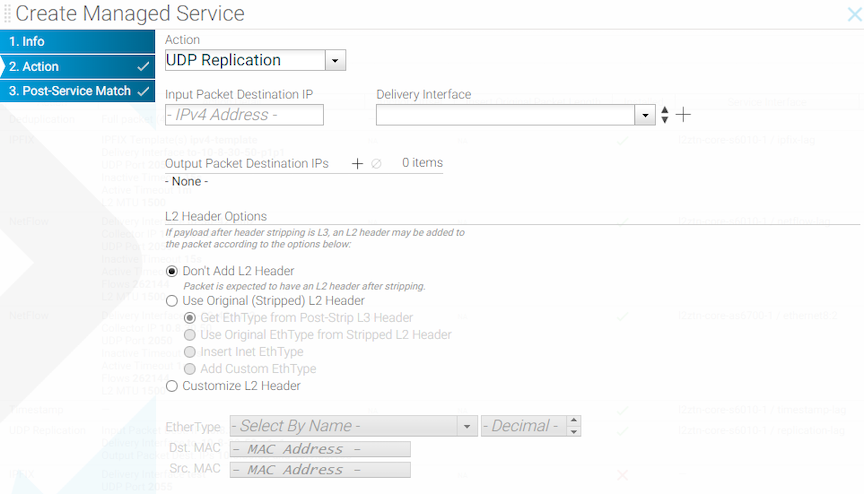

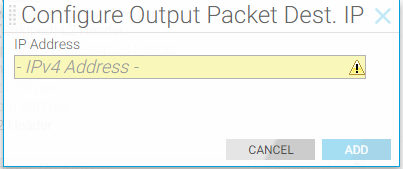

The UDP-replication service action copies UDP messages, such as Syslog or NetFlow messages, and sends the copied packets to a new destination IP address.
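A rough model of what the action does to each matching datagram follows. It is a hypothetical sketch: it assumes that the in-dst-ip parameter used in the CLI configuration for this action selects datagrams by their original destination address and that out-dst-ip is the address the copy is sent to, which this section does not state explicitly. A real implementation would also recompute the IP and UDP checksums, which the sketch omits.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class UdpDatagram:
    src_ip: str
    dst_ip: str
    dst_port: int
    payload: bytes


def replicate(pkt: UdpDatagram, in_dst_ip: str, out_dst_ip: str) -> Optional[UdpDatagram]:
    """Return a copy of `pkt` addressed to out_dst_ip, or None if it does not match.

    Hypothetical semantics: in_dst_ip selects datagrams by original destination.
    Checksum recomputation is omitted.
    """
    if pkt.dst_ip != in_dst_ip:
        return None
    return replace(pkt, dst_ip=out_dst_ip)  # payload (e.g. a syslog line) is unchanged
```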

Configure a rate limit when enabling UDP replication. When upgrading from a version of DANZ Monitoring Fabric (DMF) before release 6.3.1, the UDP-replication configuration is not applied until a rate limit is applied to the delivery interface.

CONTROLLER-1(config)# switch DMF-DELIVERY-SWITCH-1

CONTROLLER-1(config-switch)# interface ethernet1

CONTROLLER-1(config-switch-if)# role delivery interface-name udp-delivery-1

CONTROLLER-1(config-switch-if)# rate-limit 256000

GUI Configuration

Use the UDP-replication service to copy UDP traffic, such as Syslog messages or NetFlow packets, and send the copied packets to a new destination IP address. This function sends traffic to more destination syslog servers or NetFlow collectors than would otherwise be allowed.

For the header-strip service action only, configure the policy rules for matching traffic after applying the header-strip service action. After completing pages 1-4, click Append and enable the checkbox to apply the policy.

Click Save to save the managed service.

CLI Configuration

controller-1(config)# managed-service MS-UDP-REPLICATE-1

controller-1(config-managed-srv)# 1 udp-replicate DELIVERY-INTF-TO-COLLECTOR

controller-1(config-managed-srv-udp-replicate)#

controller-1(config-managed-srv-udp-replicate)# in-dst-ip 10.1.1.1

controller-1(config-managed-srv-udp-replicate)# out-dst-ip 10.1.2.1

Redundancy of Managed Services in Same DMF Policy

Using the GUI to Configure a Backup Managed Service

Using the CLI to Configure a Backup Managed Service

Application Identification

Using the CLI to Configure Application Identification

Configure the feature through the Controller in managed services.

Using the CLI to Configure app-id

managed-service ms

service-interface switch core1 ethernet2

!

1 app-id

collector 192.168.1.1

l3-delivery-interface app-to-analytics

After configuring app-id, refer to the Analytics Node for application reports and visualizations. For instance, suppose a flow is classified internally with the following tuple: ip, tcp, http, google, and google_maps. The Analytics Node then displays the most specific app ID for this flow, google_maps, under appName.

On the Analytics Node, the fields AppID 0 through AppID 4 hold the numerical IDs of the applications identified in a flow. AppID 0 is the most specific application identified in that flow, and AppID 4 is the least specific. In the example above, AppID 0 would hold the numerical ID for google_maps, AppID 1 google, AppID 2 http, AppID 3 tcp, and AppID 4 ip. Because these fields require an ID-to-name mapping to interpret, use appName instead.
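The ordering can be illustrated with a short sketch that maps a classification chain, listed from least to most specific as in the google_maps example, onto AppID 0 through AppID 4. It is hypothetical: the real fields carry numerical IDs rather than names, and how the Analytics Node handles chains longer than five entries is not described here, so the sketch simply keeps the five most specific.

```python
from typing import Dict, List


def app_id_fields(chain: List[str]) -> Dict[str, str]:
    """Map a least-to-most-specific classification chain onto AppID 0..4.

    Uses names instead of numerical IDs for readability (hypothetical).
    """
    most_specific_first = list(reversed(chain))[:5]
    fields = {f"AppID {i}": name for i, name in enumerate(most_specific_first)}
    if most_specific_first:
        fields["appName"] = most_specific_first[0]  # most specific app for the flow
    return fields
```

For the chain ip, tcp, http, google, google_maps, AppID 0 and appName are google_maps and AppID 4 is ip.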

Using the CLI to Configure app-id-filter

Using the CLI to Configure app-id and app-id-filter Combined

Follow the configuration steps described earlier for each service to configure app-id-filter and app-id together. In this case, however, app-id should use a higher sequence number than app-id-filter, so that traffic is processed by app-id-filter first and then by app-id.

The following example shows a combined app-id-filter and app-id configuration.

! managed-service

managed-service MS1

service-interface switch CORE-SWITCH-1 ethernet2

!

!

1 app-id-filter

app facebook

filter-mode forward

!

2 app-id

collector 1.1.1.1

l3-delivery-interface L3-INTF-1

In this example, app-id-filter drops all traffic except facebook before it reaches app-id. This type of service chaining can cause a performance hit and high memory utilization.

Using the GUI to Configure app-id and app-id-filter

Dynamic Signature Updates (Beta Version)

- fetch: after a successful fetch on the active Controller, it invokes the fetch RPC on the standby Controller by providing a signed HTTP URL as the source. This URL points to an internal REST API that provides the recently fetched signature file.

- delete: the active Controller invokes the delete RPC call on the standby controllers.

The Controller stores the signature files in this location: /var/lib/capture/appidsignatureupdate.

- Verify the bundle version on the service node by entering the show service-node app-id-bundle-version command in the service node CLI, as shown below.

Figure 64. Before Update

Figure 64. After Update

CLI Show Commands

Service Node

show command:

show service-node app-id-bundle-version

An app-id or app-id-filter instance must be configured; otherwise, an error message is displayed.

dmf-servicenode-1# show app-id bundle-version

Name : bundle_version

Data : 1.680.0-22 (build date Sep 26 2023)

dmf-servicenode-1#

Controller

To obtain more information about the running version on a Service Node, or when the last push attempt was made and the outcome, use the following Controller CLI commands:

show app-id push-results optional SN name

show service-node SN name app-id

dmf-controller-1# show app-id push-results

# Name IP Address Current Version Current Push Time Previous Version Previous Push Time Last Attempt Version Last Attempt Time Last Attempt Result Last Attempt Failure Reason

-|-----------------|--------------|---------------|------------------------------|----------------|------------------------------|--------------------|------------------------------|-------------------|---------------------------|

1 dmf-servicenode-1 10.240.180.124 1.660.2-33 2023-12-06 11:13:36.662000 PST 1.680.0-22 2023-09-29 16:21:11.034000 PDT 1.660.2-33 2023-12-06 11:13:34.085000 PST success

dmf-controller-1# show service-node dmf-servicenode-1 app-id

# Name IP Address Current Version Current Push Time Previous Version Previous Push Time Last Attempt Version Last Attempt Time Last Attempt Result Last Attempt Failure Reason

-|-----------------|--------------|---------------|------------------------------|----------------|------------------|--------------------|-----------------|-------------------|---------------------------|

1 dmf-servicenode-1 10.240.180.124 1.680.0-22 2023-09-29 16:21:11.034000 PDT

The show app-id signature-files command displays the validated files that are available to push to Service Nodes.

dmf-controller-1# show app-id signature-files

# Signature-file Checksum Fetch time

-|-----------------|-----------------|------------------------------|

1 file1.tar.gz abcdefgh12345 2023-08-02 22:20:49.422000 UTC

2 file2.tar.gz ijklmnop67890 2023-08-03 07:10:22.123000 UTC

The show analytics app-info filter-interface filter-interface-name command displays aggregated information over the last 5 minutes about the applications seen on a given filter interface, sorted by unique flow count. The optional size parameter limits the number of results; by default, all results are shown.

This command only works in push-per-filter mode.

dmf-controller-1# show analytics app-info filter-interface f1 size 3

# App name Flow count

-|--------|----------|

1 app1 1000

2 app2 900

3 app3 800

Syslog Messages

Syslog messages for configuring the app-id and app-id-filter services appear in a service node’s syslog through journalctl.

A Service Node syslog registers events for the app-id add, modify, and delete actions.

These events contain the keywords dpi and dpi-filter, which correspond to app-id and app-id-filter.

For example:

Adding dpi for port,

Modifying dpi for port,

Deleting dpi for port,

Adding dpi filter for port,

Modifying dpi filter for port,

Deleting dpi filter for port,

App appname does not exist - An invalid app name was entered.

Adding, modifying, or deleting app names in an app-id-filter managed service in the Controller node’s CLI affects policy refresh activity, and these events are registered in floodlight.log.

Scale

- The maximum number of concurrent sessions is currently set to permit fewer than 200,000 active flows per core. Performance may degrade as the number of concurrent flows increases. This maximum prevents the service from overloading. Surpassing this threshold may cause some flows not to be processed, and new flows will not be identified or filtered. Entries for inactive flows time out after a few minutes for ongoing sessions and a few seconds after the session ends.

- If there are many inactive sessions, DMF holds the flow contexts, reducing the number of available flows used for DPI. The timeouts are approximately 7 minutes for TCP sessions and 1 minute for UDP.

- Heavy application traffic load degrades performance.

Troubleshooting

- If IPFIX reports do not appear on an Analytics Node (AN) or Collector, ensure the UDP port is configured correctly and verify the AN receives traffic.

- If the app-id-filter app list does not appear, use the show service-node command on the Controller to ensure a Service Node (SN) is connected.

- For app-id-filter, enter at least one valid application from the list that appears when pressing <Tab>. Otherwise, the policy fails to install with the error message app-id-filter specified without at least one name TLV identifying application.

- A flow may carry other IDs and protocols when using app-id-filter. For example, the specific application for a flow may be google_maps, but there may be protocols or broader applications under it, such as ssh, http, or google. Adding google_maps will filter this flow; however, adding ssh will also filter it. Therefore, adding any of these to the filter list causes packets of this flow to be forwarded or dropped.

- An IPFIX element, BSN type 14, that existed in DMF version 8.4 was removed in 8.6.

- If an SN reboots during a dynamic signature update, it will likely boot up with the correct version. To avoid traffic loss, perform the update during a maintenance window. Also, during an update, the SN temporarily stops sending LLDP packets to the Controller and disconnects for a short while.

- After a dynamic signature update, do not change configurations or push another signature file for several minutes. The update will take some time to process. If there are any VFT changes, it may lead to warning messages in floodlight, such as:

Sync job 2853: still waiting after 50002 ms Stuck switch update: R740-25G[00:00:e4:43:4b:bb:38:ca], duration=50002ms, stage=COMMIT

These messages may also appear when configuring DPI on a large number of ports.
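The app-id-filter matching rule described in the troubleshooting list above, where a flow matches if any application or protocol in its classification chain is listed, can be sketched as follows. This is an illustrative model, not Service Node code; it assumes filter-mode forward passes only matching flows and filter-mode drop discards only matching flows, consistent with the facebook example earlier in this section.

```python
from typing import Iterable


def filter_decision(chain: Iterable[str], filter_apps: Iterable[str], mode: str) -> str:
    """Return "forward" or "drop" for a flow under a hypothetical app-id-filter model.

    chain: every ID the flow was classified with, e.g. ip, tcp, http, google, google_maps.
    mode: "forward" keeps only matching flows; "drop" discards only matching flows.
    """
    listed = set(filter_apps)
    matched = any(app in listed for app in chain)
    if mode == "forward":
        return "forward" if matched else "drop"
    if mode == "drop":
        return "drop" if matched else "forward"
    raise ValueError(f"unknown filter mode: {mode!r}")
```

Because any entry in the chain can match, listing a broad protocol such as http affects every flow classified under it, not only the most specific application.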

Limitations

- When using a drop filter, a few packets may slip through the filter before an application ID is determined for a flow, and when using a forward filter, a few packets may not be forwarded. This is estimated to be between 1 and 6 packets at the beginning of a flow.

- When using a drop filter, add the unknown app ID to the filter list to drop any unidentified traffic if these packets are unwanted.

- The Controller must be connected to a Service Node for the app-id-filter app list to appear. If the list does not appear and the application names are unknown, use app-id to send reports to the Analytics Node, and then use the application names seen there to configure an app-id-filter. The name must match exactly.

- Because app-category does not currently show the applications included in each category, do not use it when targeting specific apps. Categories like basic, which include all basic networking protocols such as TCP and UDP, may affect all flows.

- For app-id, a report is generated for a flow only after that flow has been fully classified. Therefore, the number of reported applications may not match the total number of flows. Reports are sent after enough applications are identified on the Service Node: if many applications are identified, DMF sends the reports quickly, but when only a few applications are identified, DMF sends them every 10 seconds.

- DMF treats both directions of a bidirectional flow as the same n-tuple. As such, generated reports contain the client's source IP address and the server's destination IP address.

- While configuring many ports with app-id, there may occasionally be a few Rx drops on the 16-port machines at a high traffic rate in the first couple of seconds.

- The feature uses a cache that maps destination IP address and port to the application, so performance may vary depending on the traffic profile.

- The app-id and app-id-filter services are more resource-intensive than other services. Combining them in a service chain or configuring many instances of them may degrade performance.

- At scale, such as when configuring 16 ports on the R740 DCA-DM-SEL, app-id may take a few minutes to set up on all ports; the same is true for a dynamic signature update.

- The show analytics app-info command only works in push-per-filter VLAN mode.
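Treating both directions of a flow as one n-tuple usually means normalizing the key so that either direction produces the same value. The following is a generic sketch of that technique, not DMF's internal key format; the endpoint ordering it uses is arbitrary but deterministic, and the names are hypothetical.

```python
from typing import Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


def bidirectional_key(src: Endpoint, dst: Endpoint, proto: str) -> Tuple[Endpoint, Endpoint, str]:
    """Return the same key for A->B and B->A packets of one flow."""
    first, second = sorted((src, dst))  # canonical (lexicographic) endpoint order
    return first, second, proto
```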

Redundancy of Managed Services Using Two DMF Policies

In this method, a second policy with a second managed service provides redundancy. The idea is to duplicate the policy but assign a lower policy priority to the second DANZ Monitoring Fabric (DMF) policy. In this case, the backup policy (and, by extension, the backup service) is always active but only receives relevant traffic once the primary policy goes down. This method provides true redundancy at the policy, service-node, and core switch levels but uses additional network and node resources.
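The intended failover behavior can be summarized with a toy selection function: among policies whose managed service is up, the one with the highest priority receives the traffic. This is a simplification for illustration only; it ignores how DMF actually computes overlapping policies, and the tuple layout and function name are hypothetical.

```python
from typing import List, Optional, Tuple

# (policy name, priority, managed service up?)
Policy = Tuple[str, int, bool]


def policy_receiving_traffic(policies: List[Policy]) -> Optional[str]:
    """Return the highest-priority policy whose service is up, or None."""
    candidates = [p for p in policies if p[2]]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p[1])[0]
```

With ACTIVE-POLICY at priority 101 and BACKUP-POLICY at 100, the backup receives traffic only when the active policy's service is down.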

! managed-service

managed-service MS-SLICE-1

1 slice l3-header-start 20

service-interface switch CORE-SWITCH-1 lag1

!

managed-service MS-SLICE-2

1 slice l3-header-start 20

service-interface switch CORE-SWITCH-1 lag2

! policy

policy ACTIVE-POLICY

priority 101

action forward

delivery-interface TOOL-PORT-1

filter-interface TAP-PORT-1

use-managed-service MS-SLICE-1 sequence 1

1 match ip

!

policy BACKUP-POLICY

priority 100

action forward

delivery-interface TOOL-PORT-1

filter-interface TAP-PORT-1

use-managed-service MS-SLICE-2 sequence 1

1 match ip

Cloud Services Filtering

The DANZ Monitoring Fabric (DMF) supports filtering traffic to specific services hosted in the public cloud and redirecting the filtered traffic to customer tools. DMF achieves this by reading the source and destination IP addresses of specific flows, identifying the Autonomous System (AS) number they belong to, tagging the flows with their respective AS numbers, and redirecting them to customer tools for consumption.

The following is the list of services supported:

- amazon: traffic with src/dst IP belonging to Amazon

- ebay: traffic with src/dst IP belonging to eBay

- facebook: traffic with src/dst IP belonging to Facebook

- google: traffic with src/dst IP belonging to Google

- microsoft: traffic with src/dst IP belonging to Microsoft

- netflix: traffic with src/dst IP belonging to Netflix

- office365: traffic for Microsoft Office365

- sharepoint: traffic for Microsoft SharePoint

- skype: traffic for Microsoft Skype

- twitter: traffic with src/dst IP belonging to Twitter

- default: traffic not matching other rules in this service. Supported types are match or drop.

The option drop instructs the DMF Service Node to drop packets matching the configured application.

The option match instructs the DMF Service Node to deliver packets to the delivery interfaces connected to the customer tool.

match default. It instructs the DMF Service Node to drop packets when either of the following conditions occurs:

- The stream's source IP address or destination IP address doesn't belong to any AS number.

- The stream's source IP address or destination IP address is affiliated with an AS number but has no specific action set.
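The decision described above can be sketched as: look up the service for each address, apply the first rule (by sequence number) whose service matches, and otherwise fall back to a default action. The prefix table below is a hypothetical stand-in for DMF's AS-number data and uses RFC 5737 documentation ranges rather than real allocations; first-match rule ordering and the caller-supplied default are likewise assumptions.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Hypothetical service-to-prefix table (documentation prefixes, not real AS data).
SERVICE_PREFIXES: Dict[str, List[str]] = {
    "google": ["192.0.2.0/24"],
    "sharepoint": ["198.51.100.0/24"],
}


def service_for(ip: str) -> Optional[str]:
    """Return the service whose prefixes contain `ip`, if any."""
    addr = ipaddress.ip_address(ip)
    for service, prefixes in SERVICE_PREFIXES.items():
        if any(addr in ipaddress.ip_network(prefix) for prefix in prefixes):
            return service
    return None


def app_filter_action(src_ip: str, dst_ip: str,
                      rules: List[Tuple[int, str, str]], default: str = "drop") -> str:
    """Apply rules like (1, "drop", "sharepoint"), (2, "match", "google") in sequence order."""
    services = {service_for(src_ip), service_for(dst_ip)} - {None}
    for _seq, action, service in sorted(rules):
        if service in services:
            return action
    return default  # no address maps to a service with a configured action
```

The rules (1, "drop", "sharepoint") and (2, "match", "google") mirror the 1 drop sharepoint and 2 match google entries in the configuration example that follows.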

Cloud Services Filtering Configuration

Controller(config)# managed-service <name>

Controller(config-managed-srv)#

Controller(config-managed-srv)# 1 app-filter

Controller(config-managed-srv-appfilter)#

Controller(config-managed-srv-appfilter)# 1 drop sharepoint

Controller(config-managed-srv-appfilter)# 2 match google

Controller(config-managed-srv-appfilter)# show this

! managed-service

managed-service sf3

service-interface switch CORE-SWITCH-1 ethernet13/1

!

1 app-filter

1 drop sharepoint

2 match google

Controller(config)# show running-config managed-service incomplete-managed-service

! managed-service

managed-service incomplete-managed-service

1 app-filter

Controller(config)# show running-config policy incomplete-policy

! policy

policy incomplete-policy

action forward

delivery-interface TOOL-PORT-1

filter-interface TAP-PORT-1

use-managed-service incomplete-managed-service sequence 1

1 match any

Controller(config-managed-srv-appfilter)# show policy incomplete-policy

Policy Name : incomplete-policy

Config Status : active - forward

Runtime Status : one or more required service down

Detailed Status : one or more required service down - installed to forward

Priority : 100

Overlap Priority : 0

Multiple Services Per Service Node Interface

The service-node capability is augmented to support more than one service action per service-node interface. Although this feature reduces per-interface cost, it can cause packet drops in high-volume traffic environments. Arista Networks recommends using this feature judiciously.

controller-1# show running-config managed-service Test

! managed-service

managed-service Test

service-interface switch CORE-SWITCH-1 ethernet13/1

1 dedup full-packet window 2

2 mask BIGSWITCH

3 slice l4-payload-start 0

!

4 netflow an-collector

collector 10.106.6.15 udp-port 2055 mtu 1500

- The NetFlow/IPFIX-action configuration should not be followed by the timestamp service action.

- Ensure the UDP-replication action configuration is the last service in the sequence.

- The header-stripping service with post-service-match rule configured should not be followed by the NetFlow, IPFIX, udp-replication, timestamp and TCP-analysis services.

- When configuring a header strip and slice action, the header strip action must precede the slice action.
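The ordering rules above can be checked mechanically before committing a configuration. The following validator is a hypothetical helper, not a DMF command; the action labels are illustrative (header-strip+psm stands for a header-strip action with a post-service-match rule), and it reads "followed by" as "anywhere later in the sequence", which is an assumption.

```python
from typing import List

# Services that must not come after a header-strip action with post-service-match.
AFTER_PSM_FORBIDDEN = {"netflow", "ipfix", "udp-replicate", "timestamp", "tcp-analysis"}


def chain_violations(actions: List[str]) -> List[str]:
    """Return rule violations for actions listed in sequence order (hypothetical labels)."""
    problems: List[str] = []
    for i, action in enumerate(actions):
        later = actions[i + 1:]
        if action in ("netflow", "ipfix") and "timestamp" in later:
            problems.append(f"{action} (position {i + 1}) is followed by timestamp")
        if action == "udp-replicate" and later:
            problems.append("udp-replicate is not the last service")
        if action == "header-strip+psm":
            for bad in sorted(AFTER_PSM_FORBIDDEN.intersection(later)):
                problems.append(f"header-strip with post-service-match is followed by {bad}")
    strips = [i for i, a in enumerate(actions) if a.startswith("header-strip")]
    slices = [i for i, a in enumerate(actions) if a == "slice"]
    if strips and slices and min(slices) < min(strips):
        problems.append("slice precedes header-strip")
    return problems
```

The Test service shown above (dedup, mask, slice, netflow) produces no violations under this check.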

Sample Service

The Service Node forwards packets based on the max-tokens and tokens-per-refresh parameters using the DANZ Monitoring Fabric (DMF) Sample Service feature. The sample service uses one token to forward one packet.

After consuming all the initial tokens from the max-tokens bucket, the system drops subsequent packets until the max-tokens bucket refills using the tokens-per-refresh counter at a recurring predefined time interval of 10ms. Packet sizes do not affect this service.

Arista Networks recommends keeping the tokens-per-refresh value at or below max-tokens, for example, max-tokens = 1000 and tokens-per-refresh = 500.

Setting the max-tokens value to 1000 means that the initial number of tokens is 1000, and the maximum number of tokens stored at any time is 1000.

If the Service Node forwards 1000 packets before the first 10 ms period ends, the max-tokens bucket reaches zero and the Service Node stops forwarding packets. At the end of every 10 ms interval, the bucket is refilled with the configured tokens-per-refresh value, 500 tokens in this example, which the service can use immediately to pass subsequent packets.

If the traffic rate is higher than the refresh amount, the available tokens eventually drop back to 0, and only 500 packets are forwarded every 10 ms; subsequent packets in each interval are dropped.

If the traffic rate is lower than the refresh amount, the surplus of tokens lets all packets pass. Because the system consumes only some of the tokens before the next refresh interval, available tokens accumulate until they reach the max-tokens value of 1000. The system does not store surplus tokens above the max-tokens value.

To estimate the maximum number of packets passed per second (pps), use the calculation (1000 ms / 10 ms) * tokens-per-refresh, assuming the max-tokens value is at least as large as tokens-per-refresh. For example, if the tokens-per-refresh value is 5000, then 500,000 pps are passed.
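The token-bucket behavior described above can be simulated in a few lines. This is a simplified model under stated assumptions, not the Service Node implementation: arrivals are grouped per 10 ms interval, the bucket starts full at max-tokens, one token forwards one packet, and the refill happens at the end of each interval.

```python
from typing import List


def simulate_sample(arrivals_per_interval: List[int], max_tokens: int,
                    tokens_per_refresh: int) -> List[int]:
    """Return packets forwarded in each 10 ms interval (simplified model)."""
    tokens = max_tokens  # the bucket starts full
    forwarded: List[int] = []
    for arriving in arrivals_per_interval:
        sent = min(arriving, tokens)  # one token per packet; the rest are dropped
        tokens -= sent
        forwarded.append(sent)
        tokens = min(max_tokens, tokens + tokens_per_refresh)  # refill, capped at max-tokens
    return forwarded


def max_pps(tokens_per_refresh: int, interval_ms: int = 10) -> int:
    """(1000 ms / interval) * tokens-per-refresh, assuming max-tokens >= tokens-per-refresh."""
    return (1000 // interval_ms) * tokens_per_refresh
```

With max-tokens 1000 and tokens-per-refresh 500, a steady 2000 packets per interval yields 1000 forwarded packets in the first interval and 500 in each one after that, while 300 packets per interval all pass.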

The Sample Service feature can be used as a standalone Managed Service or chained with other Managed Services.

Use Cases and Compatibility

- Applies to Service Nodes

- Limit traffic to tools that cannot handle a large amount of traffic.

- Use the Sample Service before another managed service to decrease the load on that service.

- The Sample Service is applicable when needing only a portion of the total packets without specifically choosing which packets to forward.

Sample Service CLI Configuration

Show Commands

Use the show running-config managed-service sample_service_name command to view pertinent details. In this example, the sample_service_name is techpubs.

DMF-SCALE-R450> show running-config managed-service techpubs

! managed-service

managed-service techpubs

!

1 sample

max-tokens 1000

tokens-per-refresh 500

DMF-SCALE-R450>

Sample Service GUI Configuration

Troubleshooting Sample Service

Troubleshooting

- If the Service Node interfaces forward only a few packets, the max-tokens and tokens-per-refresh values likely need to be higher.

- If fewer packets than the tokens-per-refresh value are forwarded, ensure the max-tokens value is larger than the tokens-per-refresh value. The system discards any surplus refresh tokens above the max-tokens value.

- When all traffic is forwarded, either the initial max-tokens value is too large or the tokens refreshed by tokens-per-refresh exceed the packet rate.

- Packet drops that begin after the first 10 ms following the start of traffic may be due to a low tokens-per-refresh value. To forward all packets, calculate the minimum values of max-tokens and tokens-per-refresh, as in the following example.

Calculation Example

Traffic rate: 400 Mbps

Packet size: 64 bytes

400 Mbps = 400,000,000 bps

400,000,000 bps / 8 = 50,000,000 Bps

50,000,000 Bps / (64 + 20) bytes per packet = 595,238 pps (includes 20 bytes of inter-packet gap in addition to the 64-byte packet)

595,238 pps = 595,238 packets per 1000 ms

1 ms = 595.238 packets

10 ms = 5,952 packets

max-tokens: 5952 (the minimum value)

tokens-per-refresh: 5952 (the minimum value)

Limitations

- In the current implementation, the Sample Service action is bursty. The token consumption rate is not configured to hold back tokens over time, so a large burst of incoming packets can immediately consume all the tokens in the bucket. There is currently no way to select which traffic is forwarded or dropped; this depends only on when the packets arrive relative to the refresh interval.

- Setting the max-tokens and tokens-per-refresh values too high forwards all packets. The maximum value is 9,223,372,036,854,775,807, but Arista Networks recommends staying within the maximum values stated in the description section.
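To redo the Calculation Example above for other traffic rates or packet sizes, the arithmetic can be scripted. In this Python sketch, the function names are hypothetical, and the 20-byte overhead is the inter-packet gap figure used in the example. The example truncates 5952.38 packets per 10 ms to 5952. The sketch rounds up instead, because a refresh slightly below the arrival rate leaves a small shortfall of tokens.

```python
import math

IPG_BYTES = 20  # inter-packet gap added to each packet in the example above


def packets_per_second(rate_bps, packet_bytes, overhead_bytes=IPG_BYTES):
    """Packets per second on the wire for a given bit rate and packet size."""
    return rate_bps / 8 / (packet_bytes + overhead_bytes)


def min_tokens(rate_bps, packet_bytes, interval_ms=10):
    """Packets arriving in one refresh interval, rounded up to whole tokens."""
    per_interval = packets_per_second(rate_bps, packet_bytes) * interval_ms / 1000
    return math.ceil(per_interval)


pps = packets_per_second(400_000_000, 64)
print(round(pps))                   # 595238
print(round(pps * 10 / 1000, 2))    # 5952.38 packets per 10 ms
print(min_tokens(400_000_000, 64))  # 5953
```

Use the resulting value as the lower bound for both max-tokens and tokens-per-refresh.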

Flow Diff Latency and Drop Analysis

Latency and drop information helps determine whether a particular flow is losing packets and where the loss occurs. A Service Node action configured as a DANZ Monitoring Fabric (DMF) managed service can use two separate taps or spans in the production network to measure the latency of a flow traversing these two points. It can also detect packet drops between the two points when a packet appears at only one of them within a specified time frame, currently set to 100 ms.
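The following Python sketch illustrates only the matching idea. It pairs sightings of the same packet at the source and destination tap points and computes latency from their PTP timestamps. It reports a drop when no destination copy arrives within 100 ms. This section does not describe how the Service Node recognizes "the same packet," so the `key` field and the `Sighting` and `analyze` names are hypothetical.

```python
from dataclasses import dataclass

PACKET_TIMEOUT_NS = 100_000_000  # 100 ms window described in the text


@dataclass
class Sighting:
    key: str    # hypothetical per-packet identifier, e.g. a hash of invariant header fields
    tap: str    # "src" or "dst" end of the tap point pair
    ts_ns: int  # PTP timestamp in nanoseconds


def analyze(sightings):
    """Pair source and destination sightings of the same packet.

    Returns (latencies_ns, dropped_keys). A source sighting with no
    destination sighting within PACKET_TIMEOUT_NS counts as a drop, as do
    source sightings still unmatched at the end of the trace.
    Destination-only sightings are ignored in this sketch.
    """
    pending = {}  # key -> source timestamp waiting for its destination copy
    latencies, dropped = [], []
    for s in sorted(sightings, key=lambda x: x.ts_ns):
        # Expire source sightings whose 100 ms window has passed.
        for key, ts in list(pending.items()):
            if s.ts_ns - ts > PACKET_TIMEOUT_NS:
                dropped.append(key)
                del pending[key]
        if s.tap == "src":
            pending[s.key] = s.ts_ns
        elif s.key in pending:
            latencies.append(s.ts_ns - pending.pop(s.key))
    dropped.extend(pending)  # unmatched at the end of the trace
    return latencies, dropped


trace = [
    Sighting("p1", "src", 0),
    Sighting("p1", "dst", 5_000),        # seen 5 us later at the destination
    Sighting("p2", "src", 10_000),       # never seen at the destination
    Sighting("p3", "src", 20_000),
    Sighting("p3", "dst", 200_000_000),  # arrives after the 100 ms window
]
print(analyze(trace))  # ([5000], ['p2', 'p3'])
```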

Latency and drop analysis requires PTP-timestamped packets. The DMF PTP timestamping feature can timestamp packets as they enter the monitoring fabric, or the production network switches can timestamp them.

The Service Node accumulates latency values by flow and sends IPFIX data records with each flow's 5-tuple and ingress and egress identifiers. It sends IPFIX data records to the Analytics Node after collecting a specified number of values for a flow or when a timeout occurs for the flow entry. The threshold count is 10,000, and the flow timeout is 4 seconds.
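The export rule can be modeled as a per-flow accumulator that flushes a record when it holds 10,000 values or when the flow entry times out. This Python sketch is hypothetical. The flow key, the summary fields (count, min, max), and the choice to measure the timeout from entry creation are all assumptions, and the `exported` list stands in for IPFIX records sent to the Analytics Node.

```python
from collections import defaultdict

SAMPLE_COUNT_THRESHOLD = 10_000  # values per flow before a record is sent
FLOW_TIMEOUT_NS = 4_000_000_000  # 4 seconds


class FlowAccumulator:
    """Hypothetical per-flow latency accumulator with threshold and timeout export."""

    def __init__(self, threshold=SAMPLE_COUNT_THRESHOLD, timeout_ns=FLOW_TIMEOUT_NS):
        self.threshold = threshold
        self.timeout_ns = timeout_ns
        self.samples = defaultdict(list)  # flow key -> latency values
        self.first_seen = {}              # flow key -> entry creation time (assumption)
        self.exported = []                # stand-in for IPFIX records

    def add(self, flow, latency_ns, now_ns):
        self.first_seen.setdefault(flow, now_ns)
        self.samples[flow].append(latency_ns)
        if len(self.samples[flow]) >= self.threshold:
            self._export(flow)

    def expire(self, now_ns):
        """Export every flow entry older than the timeout."""
        for flow, start in list(self.first_seen.items()):
            if now_ns - start >= self.timeout_ns:
                self._export(flow)

    def _export(self, flow):
        values = self.samples.pop(flow)
        del self.first_seen[flow]
        self.exported.append((flow, len(values), min(values), max(values)))


# Flow key: 5-tuple plus ingress and egress tap identifiers (hypothetical layout).
flow = ("10.0.0.1", "10.0.0.2", 6, 40000, 443, "f1", "f2")
acc = FlowAccumulator(threshold=3, timeout_ns=100)  # small limits for illustration
for t, lat in enumerate([10, 30, 20]):
    acc.add(flow, lat, now_ns=t)   # third value reaches the threshold
acc.add(flow, 5, now_ns=3)
acc.expire(now_ns=103)             # entry created at 3 ns is now 100 ns old
print(acc.exported)
```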

Use the DMF Analytics Node to build custom dashboards to view and check the data.

Configure Flow Diff Latency and Drop Analysis Using the CLI

Configure latency and drop analysis with the flow-diff managed-service action.

The latency configuration defines one or more tap point pairs between which latency and drops are analyzed. A tap point pair comprises a source and a destination tap point, each identified by a filter interface, a policy name, or a filter interface group. Based on the latency configuration, the Service Node is configured with traffic metadata that tells it where to look for tap point information, timestamps, and the IPFIX collector.

Configure DMF policies so that traffic tapped at the tap point pairs in the network is delivered to the configured Service Node interface for analysis.

Configuration Steps for flow-diff

The following example configures a managed service with the flow-diff action:

dmf-controller-1(config)# managed-service managed_service_1

dmf-controller-1(config-managed-srv)# service-interface switch delivery1 ethernet1

dmf-controller-1(config-managed-srv)# 1 flow-diff

dmf-controller-1(config-managed-srv-flow-diff)# collector 192.168.1.1

dmf-controller-1(config-managed-srv-flow-diff)# l3-delivery-interface l3-iface-1

dmf-controller-1(config-managed-srv-flow-diff)# tap-point-pair source filter-interface f1 destination filter-interface f2

dmf-controller-1(config-managed-srv-flow-diff)# latency-table-size small|medium|large

dmf-controller-1(config-managed-srv-flow-diff)# packet-timeout 100

dmf-controller-1(config-managed-srv-flow-diff)# flow-timeout 4000

dmf-controller-1(config-managed-srv-flow-diff)# sample-count-threshold 10000

Configuring Tap Points

Configure tap points with the tap-point-pair command in the flow-diff submode, specifying one of three identifiers: filter interface name, policy name, or filter interface group.

dmf-controller-1(config-managed-srv-flow-diff)# tap-point-pair source <Tab>

filter-interface policy-name filter-interface-group

The source and destination tap points must use compatible identifiers: policy-name cannot be used with filter-interface or filter-interface-group.

The filter-interface-group option accepts any configured filter interface group and represents a collection of tap points in push-per-filter mode. This option is useful when a group of tap points all expect traffic from the same source tap point or group of source tap points, because it simplifies configuration. For example:

- Instead of configuring two separate tap-point-pairs to represent A → B and A → C, use a filter-interface-group G = [B, C] and a single tap-point-pair A → G.

dmf-controller-1(config-managed-srv-flow-diff)# tap-point-pair source type A destination filter-interface-group G

- With a topology like A → C and B → C, configure a filter-interface-group G = [A, B] and a tap-point-pair G → C.

dmf-controller-1(config-managed-srv-flow-diff)# tap-point-pair source filter-interface-group G destination type C
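Conceptually, a tap-point-pair that references a group stands for one pair per group member. The hypothetical `expand_pairs` helper below models that equivalence for the two examples above. It is an illustration only and does not reflect how the Controller stores the configuration.

```python
def expand_pairs(pairs, groups):
    """Expand tap-point-pairs whose ends may be filter interface groups.

    pairs:  list of (source, destination) names.
    groups: dict mapping a group name to its member filter interfaces.
    Returns the equivalent list of interface-to-interface pairs.
    """
    expanded = []
    for src, dst in pairs:
        for s in groups.get(src, [src]):      # a plain interface expands to itself
            for d in groups.get(dst, [dst]):
                expanded.append((s, d))
    return expanded


print(expand_pairs([("A", "G")], {"G": ["B", "C"]}))  # [('A', 'B'), ('A', 'C')]
print(expand_pairs([("G", "C")], {"G": ["A", "B"]}))  # [('A', 'C'), ('B', 'C')]
```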

There are some restrictions to keep in mind while configuring tap-point-pairs:

- The source and destination must exist and cannot refer to the same tap point.

- You can configure a maximum of 1024 tap points, and therefore a maximum of 512 tap-point-pairs. These limits apply across all managed services with the flow-diff action, not to a single flow-diff managed service.

- A filter-interface-group must not overlap with other groups within the same managed service and cannot have more than 128 members.

- When configuring multiple tap-point-pairs using filter-interface and filter-interface-group, an interface that is part of a filter interface group cannot also be used as an individual source or destination within the same managed service.
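The restrictions above can be expressed as a pre-check before committing configuration. This Python sketch is hypothetical, since the Controller performs its own validation. It assumes each end of a tap-point-pair counts as one tap point toward the 1024 limit. It also models each managed service as a dict holding its pairs and the filter interface groups it references.

```python
MAX_TAP_POINTS = 1024     # across all flow-diff managed services
MAX_GROUP_MEMBERS = 128


def validate(services, known_interfaces):
    """Return a list of restriction violations (empty when the config is valid).

    services: {service name: {"pairs": [(src, dst)], "groups": {group: [members]}}}
    known_interfaces: set of existing filter interface names.
    """
    errors = []
    total_tap_points = 0
    for name, svc in services.items():
        groups = svc.get("groups", {})
        grouped = {}  # member interface -> group that contains it
        for g, members in groups.items():
            if len(members) > MAX_GROUP_MEMBERS:
                errors.append(f"{name}: group {g} has more than {MAX_GROUP_MEMBERS} members")
            for m in members:
                if m in grouped:
                    errors.append(f"{name}: {m} is in both {grouped[m]} and {g}")
                grouped[m] = g
        for src, dst in svc.get("pairs", []):
            total_tap_points += 2  # assumption: one tap point per pair end
            if src == dst:
                errors.append(f"{name}: source and destination are both {src}")
            for end in (src, dst):
                if end not in groups and end not in known_interfaces:
                    errors.append(f"{name}: tap point {end} does not exist")
                if end in grouped:
                    errors.append(f"{name}: {end} is already a member of group {grouped[end]}")
    if total_tap_points > MAX_TAP_POINTS:
        errors.append(f"{total_tap_points} tap points exceed the limit of {MAX_TAP_POINTS}")
    return errors


known = {"A", "B", "C", "D"}
print(validate({"svc1": {"pairs": [("A", "G")], "groups": {"G": ["B", "C"]}}}, known))  # []
```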

The accepted tap point identifiers depend on the VLAN mode:

Push per Filter

- Use the filter-interface option to provide the filter interface name.

- Use the filter-interface-group option to provide the filter interface group name.

- The policy-name identifier is invalid in this mode.

Push per Policy

- Accepts a policy-name identifier as a tap point identifier.

- The filter-interface and filter-interface-group identifiers are invalid in this mode.

- Policies configured as source and destination tap points within a tap-point-pair must not overlap.
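The identifier rules for both VLAN modes reduce to a lookup table. In this Python sketch, the mode labels and the `check_identifiers` function are hypothetical. Policy overlap is not checked because that depends on each policy's match rules.

```python
# Identifier types accepted for tap points in each VLAN mode (labels are illustrative).
VALID_IDENTIFIERS = {
    "push-per-filter": {"filter-interface", "filter-interface-group"},
    "push-per-policy": {"policy-name"},
}


def check_identifiers(vlan_mode, src_type, dst_type):
    """True when both ends of a tap-point-pair use identifiers valid for the mode."""
    allowed = VALID_IDENTIFIERS[vlan_mode]
    return src_type in allowed and dst_type in allowed


print(check_identifiers("push-per-filter", "filter-interface", "filter-interface-group"))  # True
print(check_identifiers("push-per-policy", "filter-interface", "policy-name"))             # False
```

Because each mode accepts only one family of identifiers, the table also enforces the rule that policy-name cannot be mixed with filter-interface or filter-interface-group.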

Configuring Policy

Irrespective of the VLAN mode, configure a policy or policies so that the same packet can be tapped from two independent points in the network and then sent to the Service Node.

After creating a policy, add the managed service with the flow-diff action as shown below:

dmf-controller-1(config-policy)# use-managed-service <service_name> sequence 1

Consider the following when configuring policies, depending on the VLAN mode:

Push per Filter

- Only one policy can contain the flow-diff service action.

- A policy should include all filter interfaces and filter interface groups configured as tap points in the flow-diff configuration. If filter interfaces or groups are missing from the policy, drops may be reported when, in reality, packets from one end of the tap-point-pair are simply not forwarded to the Service Node.

- Avoid adding filter interfaces (or groups) that are not part of any tap-point-pair. Latency and drop analysis is not performed for them, so they cause unnecessary packets to be forwarded to the Service Node, and those packets are reported as unexpected.
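In push-per-filter mode, the two policy recommendations above amount to a set comparison between the policy's filter interfaces and the tap points. The hypothetical `audit_policy` helper below reports both cases. It assumes group names have already been expanded into their member interfaces.

```python
def audit_policy(policy_interfaces, tap_points):
    """Compare a push-per-filter policy's filter interfaces with the flow-diff tap points.

    Returns (missing, extra):
      missing: tap points absent from the policy, which may cause false drop reports.
      extra:   interfaces in no tap-point-pair, whose packets are reported as unexpected.
    """
    policy = set(policy_interfaces)
    taps = set(tap_points)
    return sorted(taps - policy), sorted(policy - taps)


print(audit_policy(["f1", "f3"], ["f1", "f2"]))  # (['f2'], ['f3'])
```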

Push per Policy

- Add the flow-diff service to two policies when using policy-name as the source and destination identifiers. In this case, there is no restriction on how many filter interfaces a policy can have.

- A policy configured as one of the tap points fails if it overlaps with the other policy in the tap-point-pair, or if the other policy does not exist.

Also enable timestamping in the policy:

dmf-controller-1(config-policy)# use-timestamping

Configuring PTP Timestamping

This feature depends on configuring PTP timestamping for the packet stream going through the tap points. Refer to the Resources section for more information on setting up PTP timestamping functionality.

Show Commands

The following show commands provide helpful information.

The show running-config managed-service managed_service_name command helps check whether the flow-diff configuration is complete.

dmf-controller-1(config)# show running-config managed-service flow-diff

! managed-service

managed-service flow-diff

service-interface switch DCS-7050CX3-32S ethernet2/4

!

1 flow-diff

collector 192.168.1.1

l3-delivery-interface AN-Data

tap-point-pair source filter-interface ingress destination filter-interface egress

The show managed-services managed_service_name command provides status information about the service.

dmf-controller-1(config)# show managed-services flow-diff

# Service Name Switch          Switch Interface Installed Max Post-Service BW Max Pre-Service BW Total Post-Service BW Total Pre-Service BW

-|------------|---------------|----------------|---------|-------------------|------------------|---------------------|--------------------|

1 flow-diff    DCS-7050CX3-32S ethernet2/4      True      25Gbps              25Gbps             624Kbps               432Mbps

The show running-config policy policy_name command shows whether the policy includes the flow-diff service, whether use-timestamping is enabled, and whether the correct filter interfaces are used.

The show policy policy_name command provides detailed information about a policy, including any errors related to the flow-diff service. The Service Interfaces section of the output shows the packets transmitted to the Service Node and the IPFIX packets received from the Service Node.

dmf-controller-1(config)# show policy flow-diff-1

Policy Name: flow-diff-1

Config Status: active - forward

Runtime Status : installed

Detailed Status: installed - installed to forward

Priority : 100

Overlap Priority : 0

# of switches with filter interfaces : 1

# of switches with delivery interfaces : 1

# of switches with service interfaces: 1

# of filter interfaces : 1

# of delivery interfaces : 1

# of core interfaces : 4

# of services: 1

# of pre service interfaces: 1

# of post service interfaces : 1

Push VLAN: 2

Post Match Filter Traffic: 215Mbps

Total Delivery Rate: -

Total Pre Service Rate : 217Mbps

Total Post Service Rate: -

Overlapping Policies : none

Component Policies : none

Runtime Service Names: flow-diff

Installed Time : 2023-11-16 18:15:27 PST

Installed Duration : 19 minutes, 45 secs

~ Match Rules ~

# Rule

-|-----------|

1 1 match any

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ Filter Interface(s) ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# DMF IF Switch   IF Name    State Dir Packets  Bytes       Pkt Rate Bit Rate Counter Reset Time

-|------|--------|----------|-----|---|--------|-----------|--------|--------|------------------------------|

1 BP1    7280SR3E Ethernet25 up    rx  24319476 27484991953 23313    215Mbps  2023-11-16 18:18:18.837000 PST